Cheat Sheet for AWS SAA-02

IAM

- IAM role policy only define which API actions can be made to that role.

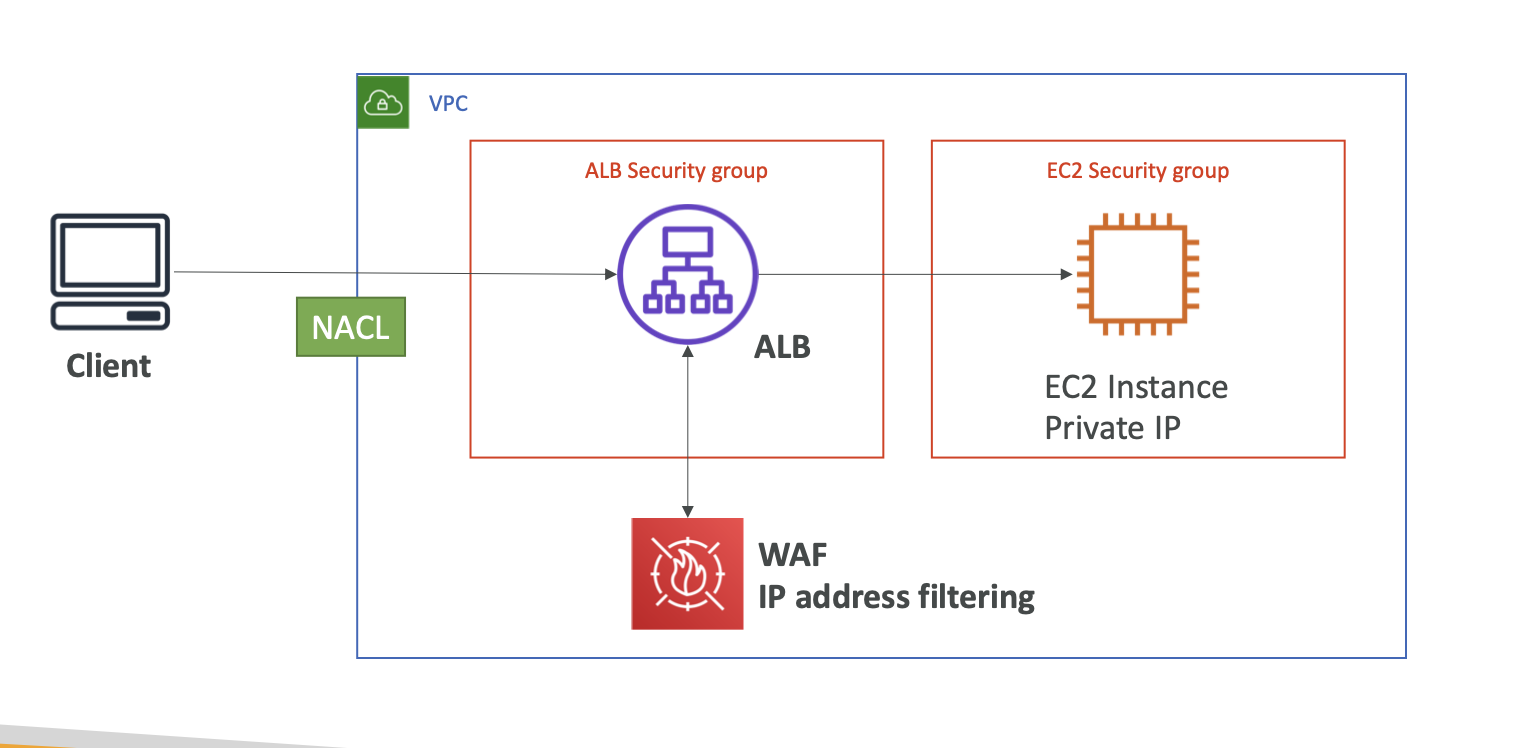

VPC

each account can create 5 VPC, and each vpc can create 200 subnets

private subnet => NAT Gateway => IGW

Direct Connect can provide 1Gbps to 10Gbps private network, but not encryption.

For accessing applications in different regions privately, you can configure inter-region VPC peering and create a VPC endpoint for specific service or application

NACLs & SG

- By default, new created SG only allow all connection from the outbound. New created NACLs deny both inbound and outbound connection

- However, default NACLs is configured to allow all traffic to flow in and out of the subnets to which it is associated.

- NACLs

- The lower number rule has precedence

- If a request comes into a web server in your VPC from a computer on the internet, your network ACL must have an outbound rule to enable traffic destined for ports 49152-65535 (ephemeral ports)

- SGs

- Inbound Rules: by default, disallow all traffic

- Outbound Rules: by default, allow all traffic

VPC Endpoint

- VPC Endpoint Policy

- VPC has a policy which by default allows all actions on all S3 buckets. We can restrict access to certainn S3 buckets and certain actions on this policy. In such cases, for accessing any new buckets or for any new actions, the VPC endpoint policy needs to be modified accordingly.

- VPC Endpoints does not supported outside VPC

- Two types

- Interface Endpoint

- is an elastic network interface (ENI) with a private IP address

- Gateway Endpoint

- Currently supports S3 and DynamoDB

- Interface Endpoint

- VPC Endpoint Policy

VPN

- Site-to-Site VPN provide encryption connection through Internet, not private network.

- Policy-based VPNs using one or more pairs of security associations drop already existing connections when new connection requests are generated with different security associations. This cause intermittent packet loss and other connectivity failures.

- Using Route-Based VPNs can get rid of connectivity issues of Policy-Based VPNs

- CloudHub

- To use AWS VPN CloudHub, one must create a Virtual Private Gateway with multiple Customer Gateways, each with a unique Border Gateway Protocol (BGP) Autonomous System Number (ASN)

- VPN CloudHub operates on a simple hub-and-spoke model that you can use with or without a VPC. This design is good for primary or backup connectivity between remote offices.

- The sites must not have overlapping IP ranges

- Each Customer Gateway must attach a public IP address. You must use a unique Border Gateway Protocol (BGP) Autonomous System Number (ASN) for each Customer Gateway.

- VGW by default acts as a Hub and spoke & no additional configuration needs to be done at the VGW end.

- Each router in each spoke needs to have BGP peering only with VGW & not with routers in other locations.

- This design is suitable if you have multiple branch offices and existing internet connections and would like to implement a convinient, potentially low-cost hub-and-spoke model for primary or backup connectivity between these remote offices

- Process for creating Site-to-Site VPN

- Specify the type of routing that you plan to use (static or dynamic)

- Update the route table for your subnet

- Static and Dynamic Routing

- the type of routing that you select can depend on the make and model of your customer gateway device.

- If your customer gateway device support Border Gateway Protocol (BGP), specify dynamic routing. If not, specify static.

- Reducing backup time of large data size by using VPN

- Enable ECMP on on-premises devices to forward traffic on both VPN endpoints

- ECMP (Equal Cost Multi-Path) can be used to carry traffic on both VPN endpoints, increasing performance and faster data transfer.

- ECMP needs to be enabled on Client end devices and not on the VGW end.

- Customization

- AWS Site-to-Site VPN offers cutomizable tunnel options including inside tunnel IP address, pre-shared key, and Border Gateway Protocol Autonomous System Number (BGP ASN). In this way, you can set up multiple secure VPN tunnels to increase the bandwidth for your applications or for resiliency in case of a down time. In addition, equal-cost multi-path routing (ECMP) is available with AWS Site-to-Site VPN on AWS Transit Gateway to help increase the traffic bandwidth over multiple paths.

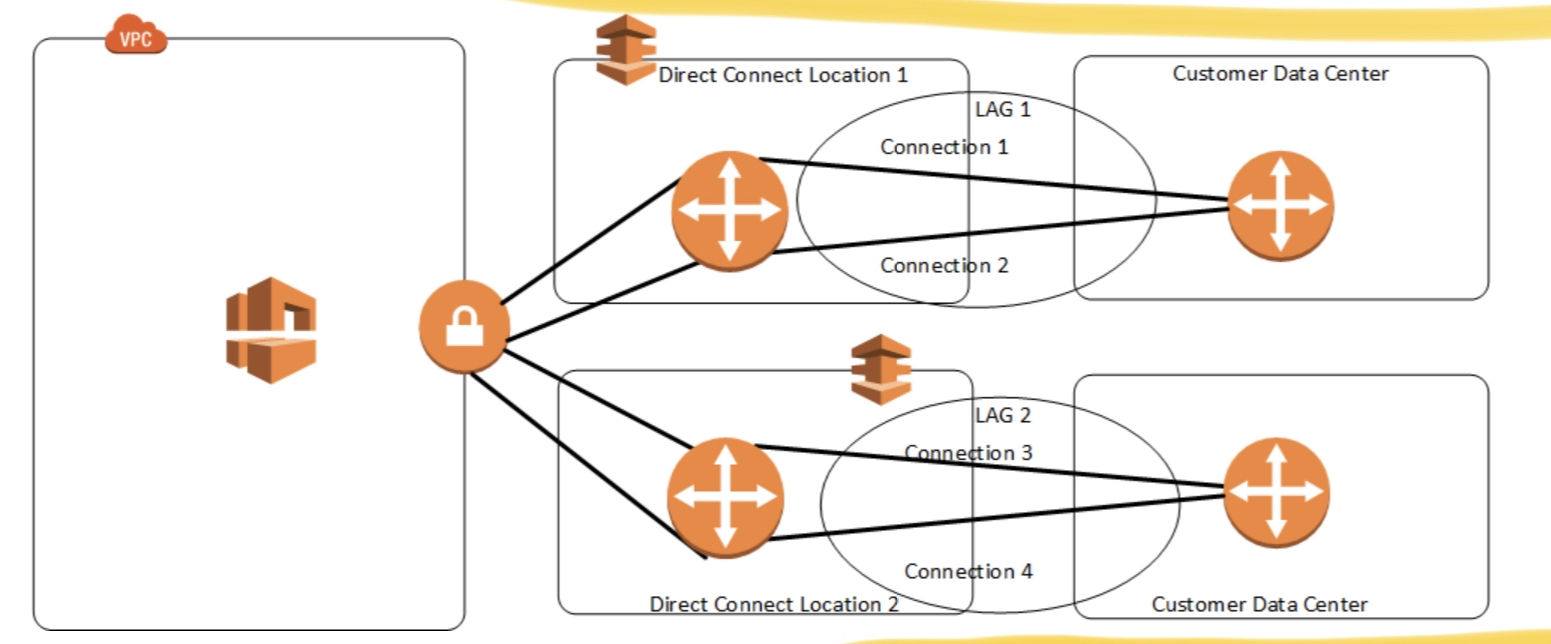

LAGs

- LAG stands for Link Aggregation Groups

- You can use multiple connections for redundancy.

- A LAG is a logical interface that uses the Link Aggregation Control Protocol (LACP) to aggregate multiple connections at a single AWS Direct Connect endpoint, allowing you to treat them as a single, managed connection.

- For higher throughput, LAG can aggregate multiple DX connections to give a maximum of 50 Gig bandwidth.

A NAT Gateway cannot send traffic over VPC endpoints, VPN connections, AWS Direct Connect, or VPC Peering connections.

You can associate secondary IPv4 CIDR blocks with your VPC. When you associate a CIDR block with your VPC, a route is automatically added to your VPC route tables to enable routing within the VPC (the destination is the CIDR block ad the target is local).

A subnet’s CIDR cannot be edited once created

Route Tables Target Naming

- VPC Peering: pcx-xxxx

- VPN: vgw-xxxx

- Direct Connect: vgw-xxxx

VPC Peering

- For the private connections between regions, VPC peering should be used. Then VPC endpoint allows users to access the DynamoDB service privately.

- doesn’t support transitive routing

Visting websites that belongs to the same region, the latency will be almost same.

Transit Gateway

- AWS Transit Gateway centralize outbound internet traffic from multiple VPCs using hub-and-spoke design.

Hybrid Option: Direct Connect + VPN

- VPN is needed as it creats an IPsec connection. Direct Connect is also required because it establishes a private connection with high bandwidth throughput.

- With Direct Connect + VPN, you can create IPsec-encrypted private connection.

For resources can be accessed from Internet

Need an IGW

The route table needs to be attched to an IGW that is created for the VPC

EC2

- Instance Type

- R: more ram

- C: more CPU

- M: balanced type

- I: more I/O

- G: more GPU

- Instance type offers different compute, memory, and storage capabilities, and is grouped in an instance family based on these capabilities.

- Launch Mode

- On-Demand

- Spot Instance

- Reserved

- Dedicated

- Placement Group

- Types

- Cluster (better for HPC)

- instances are all in one AZ

- cluster cannot be multi-AZ

- cluster is not available in t2.micro

- for HPC

- all the instances are placed in the same rack in the same AZ

- Spread (better for resolving simultaneous failures)

- can be multi-AZ

- cannot span across multiple regions

- supports a maximum of 7 running instances per AZ

- appropriate for availability scenarios

- the insatnces in different racks. Every rack has its own hardware and power source.

- Partition (better for Big Data)

- within one AZ

- each partition do not share the underlying hardware with each other

- Cluster (better for HPC)

- Placement Group supports migrating instances between palcement groups, but not merging them

- Placement Group cannot span multiple regions

- Types

- EC2 Hibernate

- Pre-warm EC2 instance

- The instance needs to be launched with an EBS root volume

- Note: You cannot hibernate an instance in an ASG or used by ECS

- The instance retains its private IPv4 addresses and any IPv6 addresses when hibernated and started.

- When an EC2 instance is in the Hibernate state, you pay only for the EBS volumes and Elastic IP addresses attached to it.

- Metadata

ASG

ASG launches new instances based on the configuration defined in Launch Configuration

AMI ID is set during the creation of launch configuration and cannot be modified.

Using Auto Scaling is good for

- Better fault tolerance

- Better availability

Default metric type for Simple Policy

- ALB Request Count Per Target

- Average Network In

- Average Network Out

Memory Utilization

Default metric type for Step Policy

- CPU Utilization

- Disk Reads

- Disk Read Operations

- Disk Writes

- Disk Write Operations

- Network In

- Network Out

ASG Scaling Policies

- Target Tracking Scaling Policy

- Maintains a specific metric at a target value

- ex: want average CPU to stay at 40%

- Simple Scaling Policy (based on a single adjustment)

- Scales when an alarm is breached

- ex: when a CloudWatch alarm is triggered (CPU > 70%), then add 2 units

- Scaling Policies with Steps (based on step adjustments)

- Scales when an alarm is breached, can escalates based on alarm value changing

- Main difference between Simple Scaling Policy and Step Simple Scaling Policy is the step adjustments

- The adjustments vary based on the size of the alarm breach

- ASG react to the lower and upper bound metrics value

- AWS recommends Step Scaling Policies as a better choice than simple scaling polices.

- Scheduled Actions

- Target Tracking Scaling Policy

You can have multiple scaling policies in force at the same time

ex: multiple target tracking scaling policies for an ASG, provided that each of them uses a different metric.

If two policies are executed at the same time, EC2 Auto Scaling follows the policy with the greater impact. For example, if you have one policy to add two instances and another policy to add four instances, EC2 Auto Scaling adds four instances when both policies are triggered simultaneously.

Termination Policy

- OldestInstance

- Terminate the oldest instance in the group

- NewestInstance

- Terminate the newest instance in the group

- OldestLaunchConfiguration

- Terminate instances that have the oldest launch configuration

- ClosestToNextInstanceHour

- Terminate instances that are closest to the next billing hour

- Default

- Find the AZ which has the most number of instances

- If there are multiple instances in the AZ, delete the one with the oldest launch configuration

- If there are multiple instances, choose the instance which are closest to the next billing hour

- If there are multiple instances, select one of them at random

- OldestInstance

ASG tries the balance thee number of instances across AZ by default

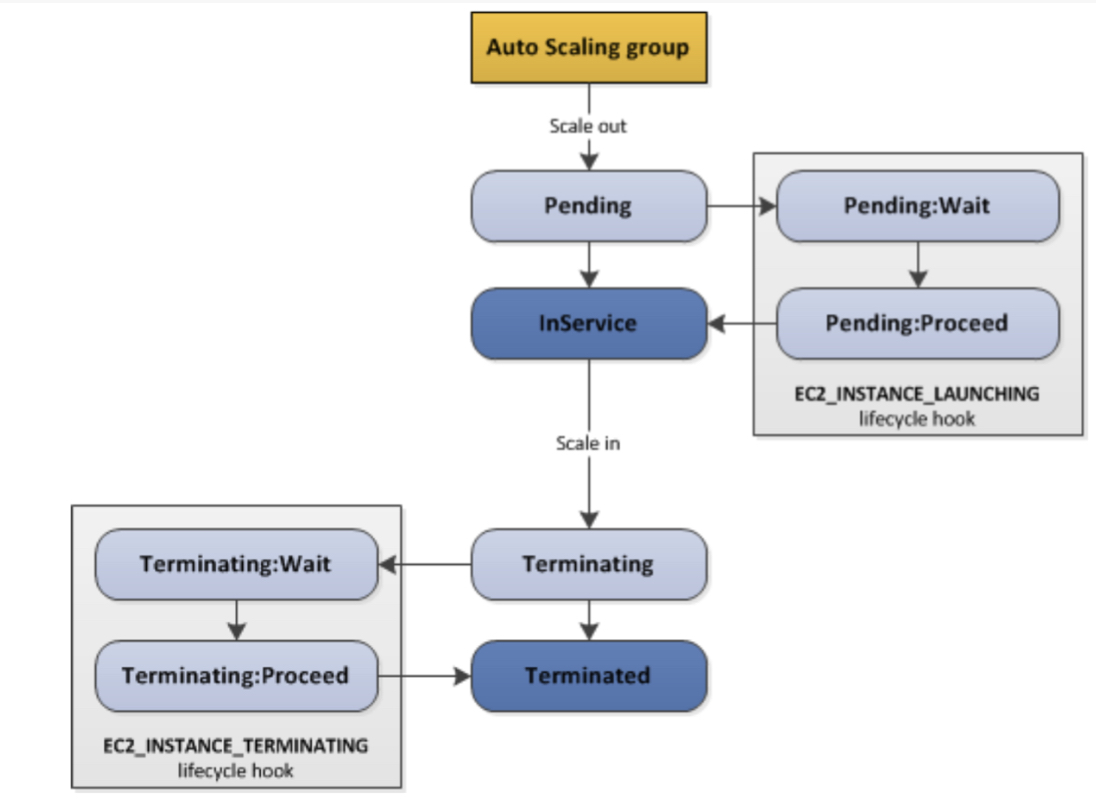

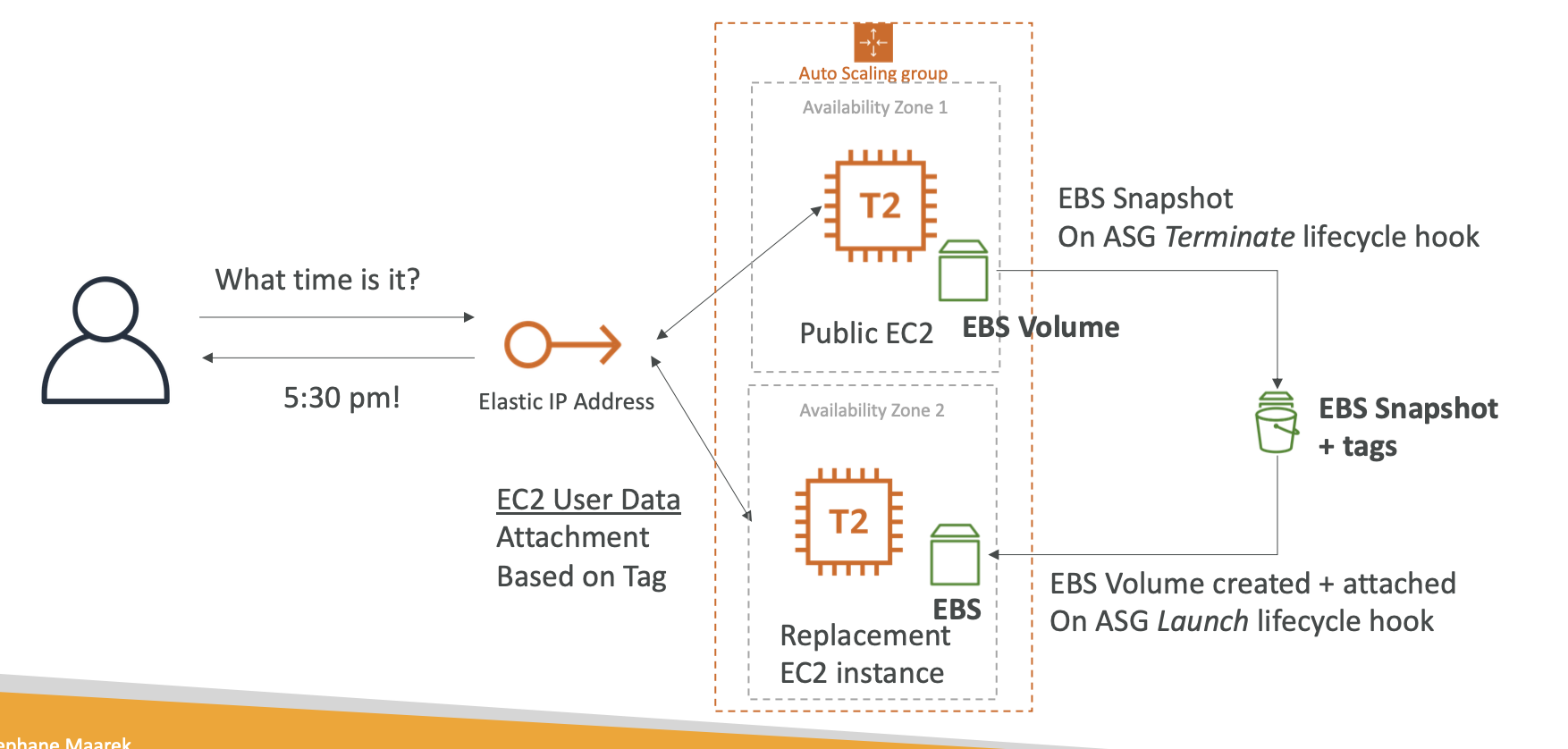

Lifecycle Hooks

- By default as soon as an instance is lanched in an ASG it’s in service

- You have the ability to perform extra steps before the instance goes in service (Pending State)

- You have the ability to perform some actions before the instance is terminated (Terminating State)

Salcing Cooldown

- Cooldown period helps to ensure that your ASG doesn’t luanch or terminate additional instances before the previous scaling activity takes effect

- We can create cooldowns that apply to a specific scaling policy

IAM roles attached to an ASG will get assigned to EC2 instances

ASG is free, but the underlying resources are not free

better scaling rules that are directly managed by EC2

- Target Average CPU Usage

- Number of requests on the ELB per instance

- Average Network In

- Average Network Out

By default, Amazon EC2 Auto Scaling health checks use the results of the EC2 status checks to determine the health status of an instance

Health Check Grace Period

- AS instance that has just come into service needs to warm up before it can pass the health check.

- EC2 AG waits until the health check grace period ends before checking the health status of the instance

ASG supports a mix of On-Demand & Spot instances, which helps design a cost-optimized solution without impacting the performance. You can choose the percentage of On-Demand & Spot instances based on the application requirement (OnDemandPercentageAboveBaseCapacity)

EBS

- Storage Type

- gp2:

- general purpose, max 16,000 IOPS, max 250 MB/s throughput

- IOPS is increased with volume size

- io1:

- high IOPS, max 64,000 IOPS, max 1,000 MB/s throughput

- IOPS is not increased with volume size

- st1:

- high throughput, HDD, max 500 IOPSmax 500MB/s throughput

- sc1:

- lowest price, HDD, max 250 IOPS, max250MB/s throughput

- gp2:

- EBS Snapshot Lifecycle

- You cann use Amazon Data Lifecycle Management (DLM) to automate the creation, retention, and deletion of snapshots taken to back up your EBS volumes.

- Automating snapshot management helps you to

- Protect valuable data by enforcing a regular backup schedule

- Retain backups as required by auditors or internal compliance

- Reduce storage costs by deleting outdated backups

- You can back up the data on your EBS volumes to S3 by taking point-in-time snapshots. Snapshots are incremental backups, which means that only the blocks on the device that have changed after you most recent snapshot are saved. This minimizes the time required to create the snapshot and saves on storage costs by not duplicating data. When you delete a snapshot, only the data unique to that snapshot is removed. Each snapshot contains all of the information needed to restore your data (from the moment when the snapshot was taken) to a new EBS volume.

- EBS Performance Tips

- EBS-optimized instance

- EBS-optimized instance uses an optimized configuration stack and provides additional, dedicated capacity for EBS I/O. This optimization will minimize contention between EBS I/O and other traffic from your instance.

- Use a Modern Linux Kernel

- Use RAID 0 to Maximize Utilizationn of Instance Resource

- You can join multiple gp2, io1, st1, or sc1 volumes together in a RAID 0 configuration to use available bandwidth for these instances.

- EBS-optimized instance

- EBS Encryption

- Ensuring the security of both data-at-rest and data-in-transit between an instance and its attached EBS storage.

- You can enable encryption while copying a snapshot from an unencrypted snapshot

- You cannot remove encryption from an encrypted snapshot

- You cannot create an encrypted snapshot from an uncrypted volume

- CloudWatch Metrics for EBS

- VolumeReadBytes / VolumeWriteBytes

- VolumeReadOps / VolumeWriteOps

- VolumeTotalReadTime / VolumeTotalWriteTime

- VolumeIdleTime

- VolumeQueueLength

- VolumeThroughputPercentage

- VolumeConsumedReadWriteOps

- BurstBalance

VolumeRemainingSize

- EBS Elastic Volumes

- With EBS Elastic Volumes, you can increase the volume size, change the volume type, or adjust the performance of your EBS volumes. If you instance supports Elastic Volumes, you can do so without detaching the volume or restarting the instance.

Instance Store

- Data in the instance store is lost under any of the following circumstances

- The underlying disk drive fails

- The instance stops

- The instance terminates

- Data will not lost during reboot

- You can only specify the size of your instance store when you launch an instance. You can’t change it or attach new after you’ve launched it.

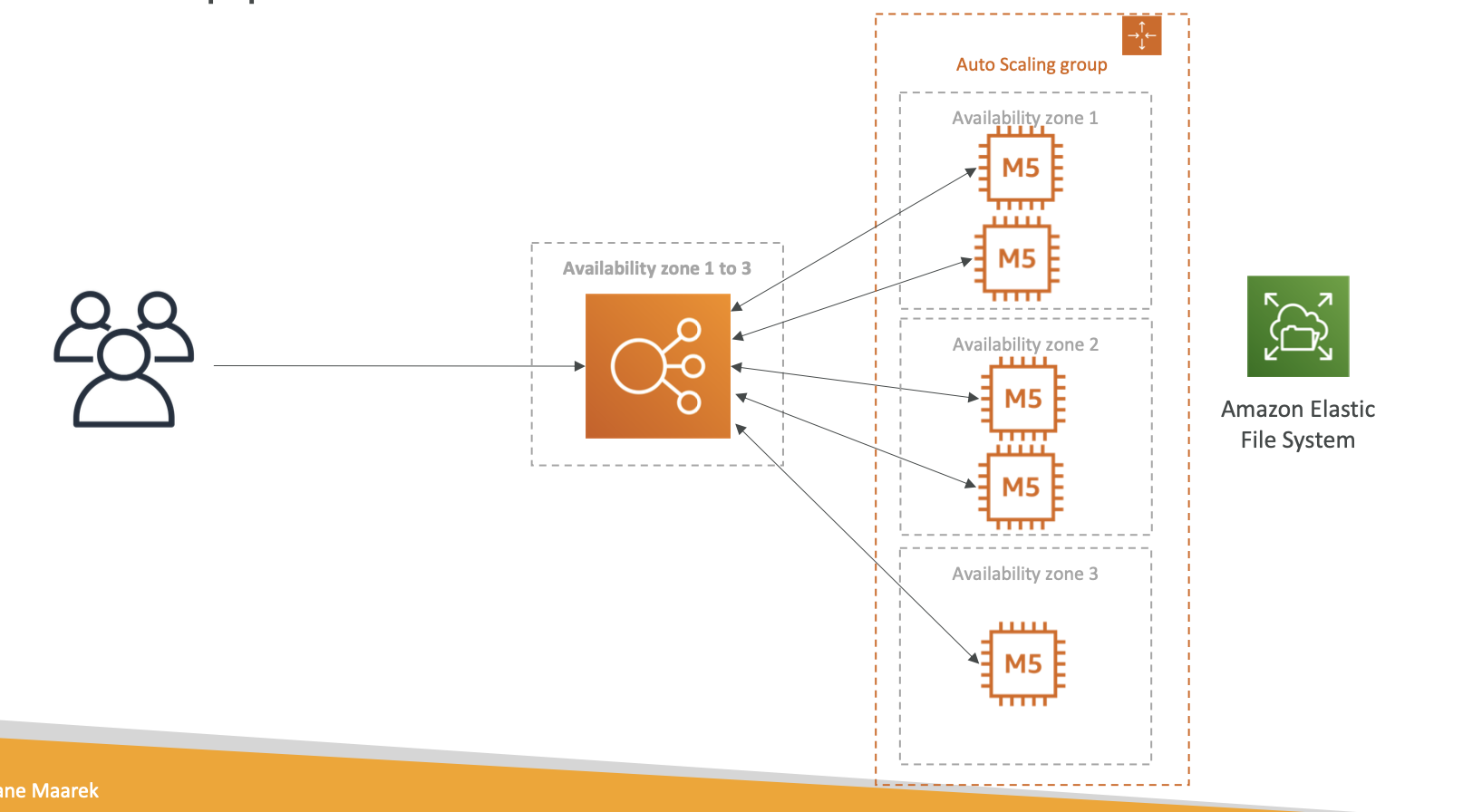

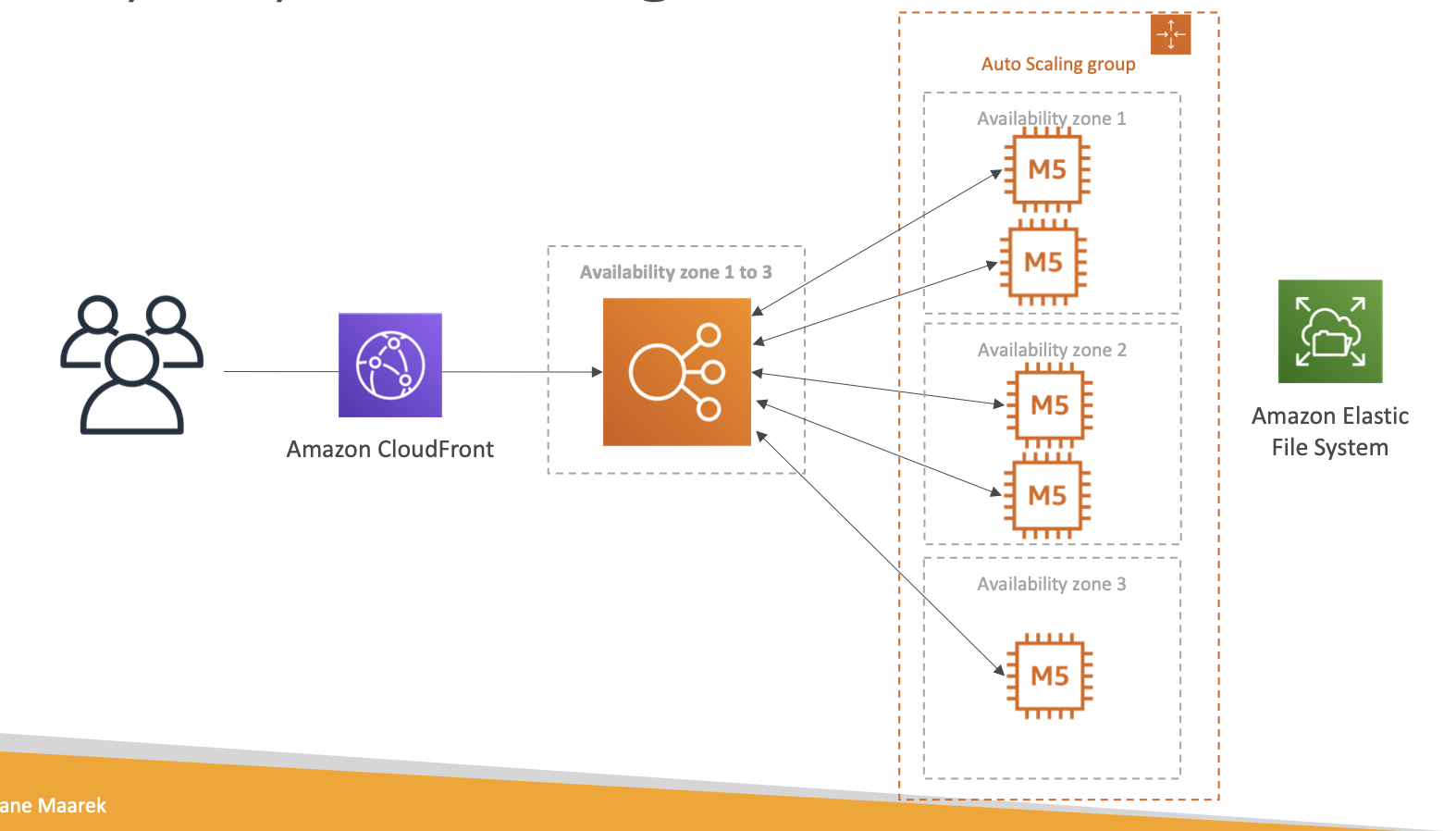

EFS

- EFS works with EC2 instances in multi-AZ

- Uses SG to control access to EFS

- Performance Mode (set at EFS creation time)

- General purpose (default): latency-sensitive use cases (web server, CMS)

- Max I/O - higher latency, throughput, highly parallel (big data, media proessingn)

- The performance mode of an EFS cannot be changed after the file system has been created

- Throughput Mode

- Bursting Throughput: With Bursting Throughput mode, a file system’s throughput scales as the amount of data stored in the EFS standard or one zone storage class grows.

- Provisioned Throughput: Provisioned throughput is available for applications with high throughput to storage

- Storage Class (life cycle management feature - move files after N days)

- Standard: for frequently accessed files

- Infrequent access (EFS-IA): cost to retrieve files, lower price to store

- Can leverage EFS-IA for cost saving

- Note: An EFS file system can only have mount targets in one VPC at a time

- When you use a VPC peering connection or VPC Transit Gateway to connect VPCs, EC2 instances in one VPC can access EFS in another VPC, even if the VPCs belong to different accounts.

- Mount targets can have associated SGs

- And SG on mount targets need inbound rules for allowing TCP 2049 from SG of EC2

- Encryption of data at rest can only be enabled during file system creation

- NFS is nont an encrypted protocol

- When you need encryption in transit, you can use Amazon EFS mount helper during mounting

- Diff between EFS and EBS

- Availability and durability

- EFS

- Data is stored redundantly across multiple AZs

- EBS

- Data is stored redundantly in a single AZ

- EFS

- Acess

- EFS

- thounds of EC2 instances from multi-AZs can connect

- EBS

- single EC2 instance in a single AZ can connect

- EFS

- Use Cases

- EFS

- Big data and analytics, media processing, content management, web serving

- EBS

- Boot volumes, transcational and NoSQL databases, data warehousing, and ETL

- EFS

- Availability and durability

- You can mount EFS over VPC connections by using VPC peering within a single AWS Region, not support inter-region VPC peering.

- Mount Target

- You can create one mount target in each AZ (recommended way)

- If the VPC has multiple subets in an AZ, you can create a mount target in only one of those subnets. All EC2 instances in the AZ can share the single mount target

Serverless

- S3

- Athena

- DynamoDB

- Lambda

- SNS, SQS

- Aurora Serverless

- API Gateway

S3

4 9’s avaialbility, and 11 9’s durability

S3 is a managed service and not part of VPC. So, VPC flow logs does not report traffic sent to the S3 bucket.

Storage Class

- Standard

- Standard-IA

- DR, backup

- access less than a month, additional retrieve fee needs

- One Zone-IA

- only exist in one AZ, additionnal retrieve fee needs

- secondary backups for on-premise data

- Intelligent Tiering

- using ML to analyse your usage and determine the appropriate storage class

- When the access pattern to web application using S3 storage buckets is unpredictable, using intelligent tier.

- Intelligent Tiering storage class includes two access tiers

- frequent access

- infrequent access

- Intelligent Tiering storage class has the same performance as that of Standard storage class

- Glacier

- for long-term cold storage

- Glacier Deep Archive

- the lowest cost storage class.

- Data retrieval time is 12 hours

Strong Consistency

- What you write is what you will read, and the results of a LIST will be an accurate reflection of what’s in the bucket. This applies to all existing and new S3 objects, works in all regions, and is available to

you at no extra charge!

- What you write is what you will read, and the results of a LIST will be an accurate reflection of what’s in the bucket. This applies to all existing and new S3 objects, works in all regions, and is available to

CRR & SRR

- must turn on “versioning” on source and destination bucket

- replication cannot be chaining

- only replicate new objects, do not be retroactive

- CRR can copy encrypted objects across buckets in different regions

- Users can choose one or more KMS keys in the replication rule.

- re-encryption is not required for the CRR

Lifecycle Management

- help to move objects to different storage class or delete objects in time

- Actions for Lifecycle Management

- Transition actions

- Expiration actions

Performance

- if you use KMS, your S3’s performance may impacted by KMS

How to improve performance

- Upload

- Multi-Part Uploads

- S3 Transfer Acceleration (compatible with Multi-Part Upload)

- using edge location to speed up

- Download

- S3 “Byte-Range” HTTP header in a GET request to download the specified range bytes of an object

- Upload

S3 Select & Glacier Select

- Retrieve specific data using SQL by performing server side filtering

- S3 Glacier Select can directly query data from S3 Glacier & restoration of data to the S3 bucket is not required for querying this data.

- For using S3 Select, objects need to be stored in an S3 bucket with CSV, JSON, or Apache Parquet format. GZIP & BZIP2 compression is supported with CSV or JSON format with server-side encryption.

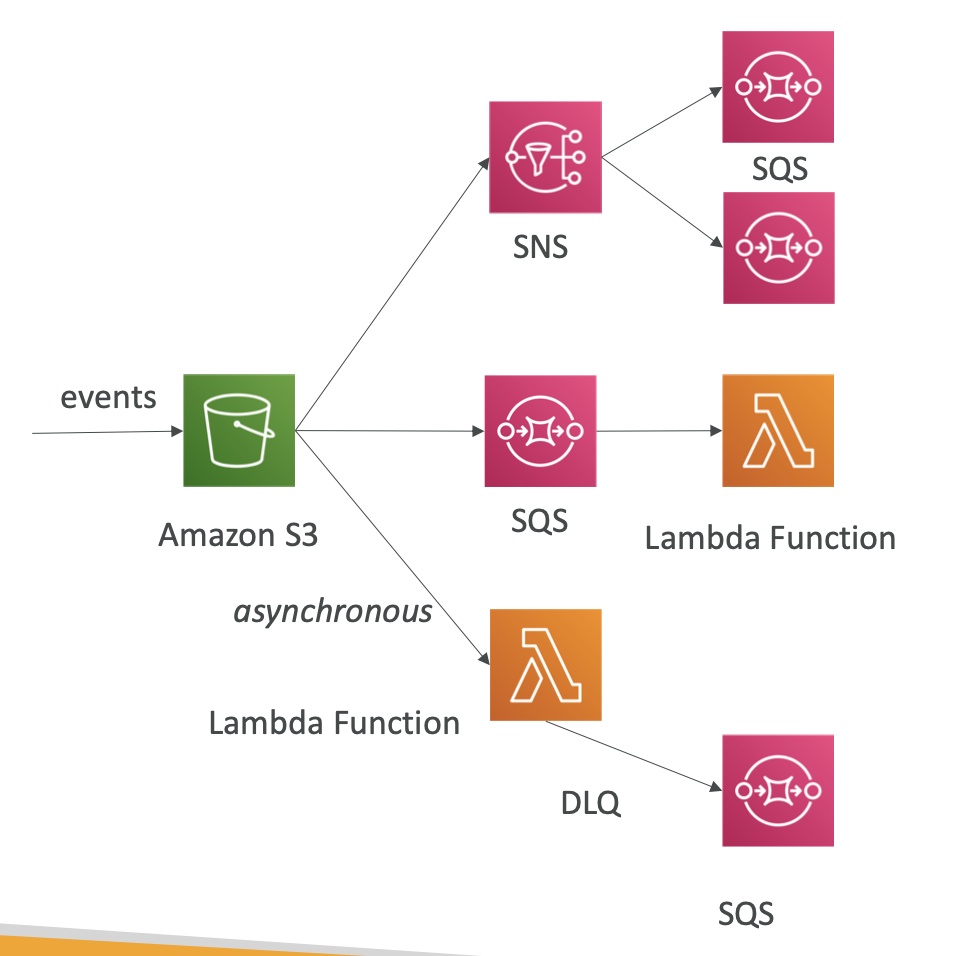

Event Notification

- 3 targets for events: SNS, SQS, Lambda

- If you don’t want miss any notifications, you need to enable versioning

Make a static website

Properties choose “use this bucket to a static website”

Permission “Public Access Settings”

Permission “Bucket Policy”

Bucket Policy

- If a bucket policy contains Effect as Deny. You must whitelist all the IAM resources which need access on the bucket. Otherwise, IAM resources cannot access the S3 bucket even if they have full access.

- Server Access Logging

- Server Access Logging provides detailed records for the requsets that are made to a bucket. Can be useful in security and access audits

- Metadata

- System Metadata

- such as object creation date is system controlled where only S3 can modify the value

- User-Defined Metadata

- When uploading an object, you can also assign metadata to the object. You provide this optional information as a name-value pair when you send PUT or POST request to create the object. When you upload objects using the REST API, the optional user-defined metadata names must begin with “x-amz-meta-“ to distinguish them from other HTTP headers.

- URLs for accessing objects

- Virtual Hosted-Style Requests

- Path-Style Requests

Old version of an existing object is also be charged by AWS.

Types of SSE (at-rest)

- SSE-S3

- SSE-KMS

- SSE-C

S3 bucket owner can create Pre-Signed URLs to upload images to S3

Object ACLs

Object level, not bucket level

Using Object ACLs provides a granular control on each file in S3 bucket

- S3 Select vs Athena

- S3 Select only support simple SELECT statement, no joins or subqueries

- Athena supports full standard SQL

With version enabled S3 buckets, each version of an object can have a different retention period

S3 CORS

- what is different origin

- different doamin or subdomain

- different protocol or different ports

- the limit number of CORS is 100

- The scheme, the hostname, and the port values in the Origin request header must match the AllowedOrigin elements in the CORSRule.

- For example, if you set the CORSRule to allow the origin “http://www.example.com", then both “https://www.example.com" and “http://www.example.com:80" origins in your request don’t match the allowed origin in your configuration. By the way, “www.example.com" and “example.com” are not the same hostname

- CORS configuration can use JSON or XML format.

- CORS only supports GET, PUT, POST, DELETE, and HEAD.

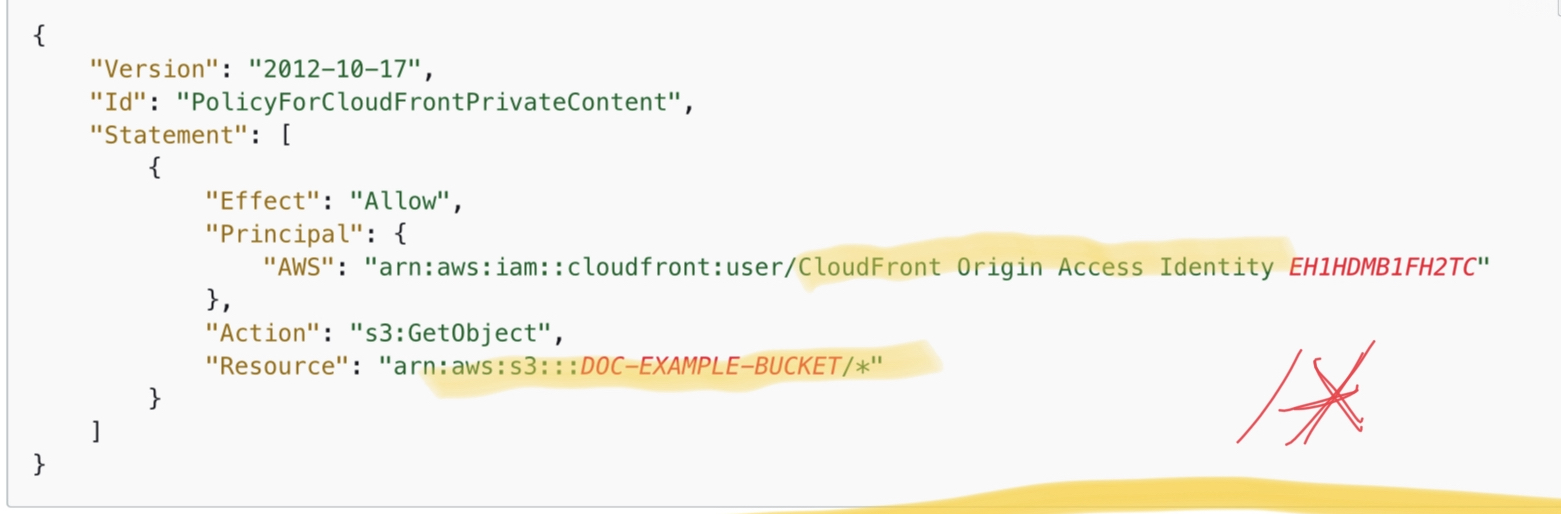

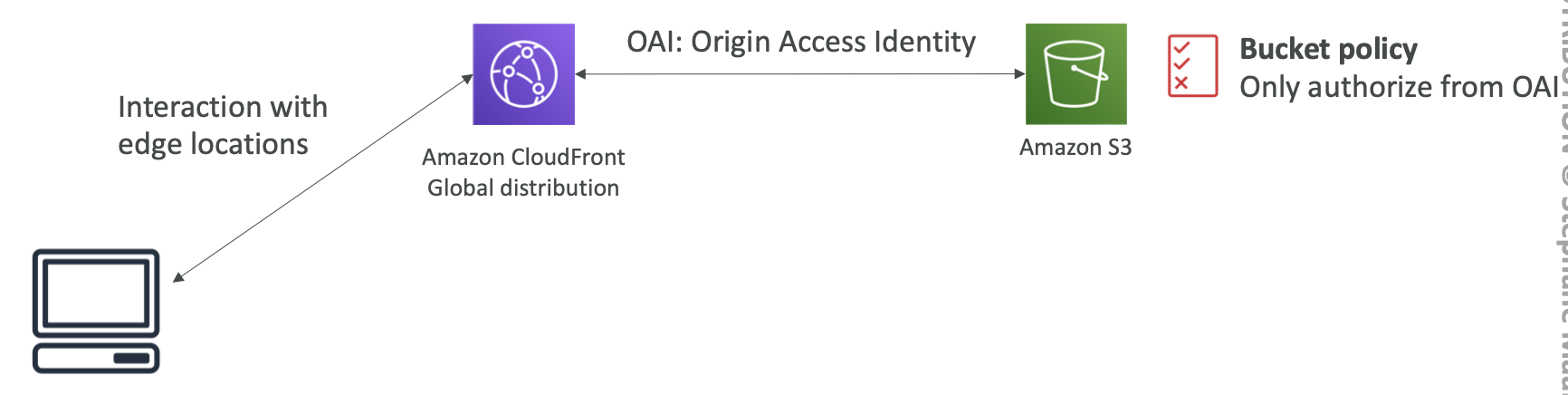

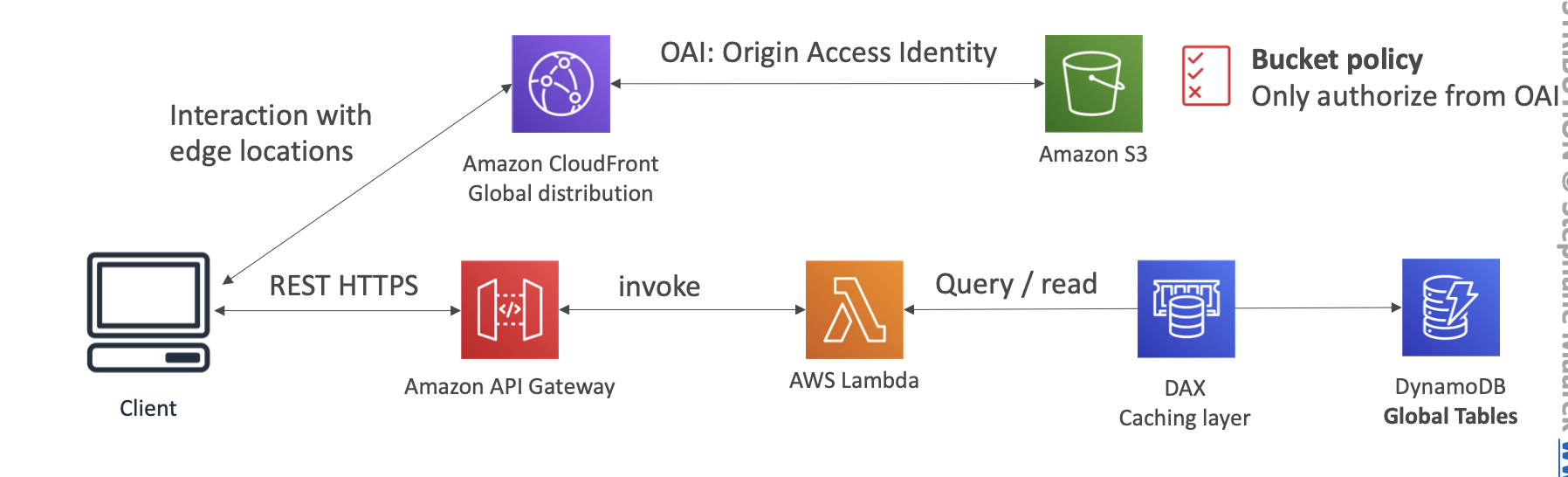

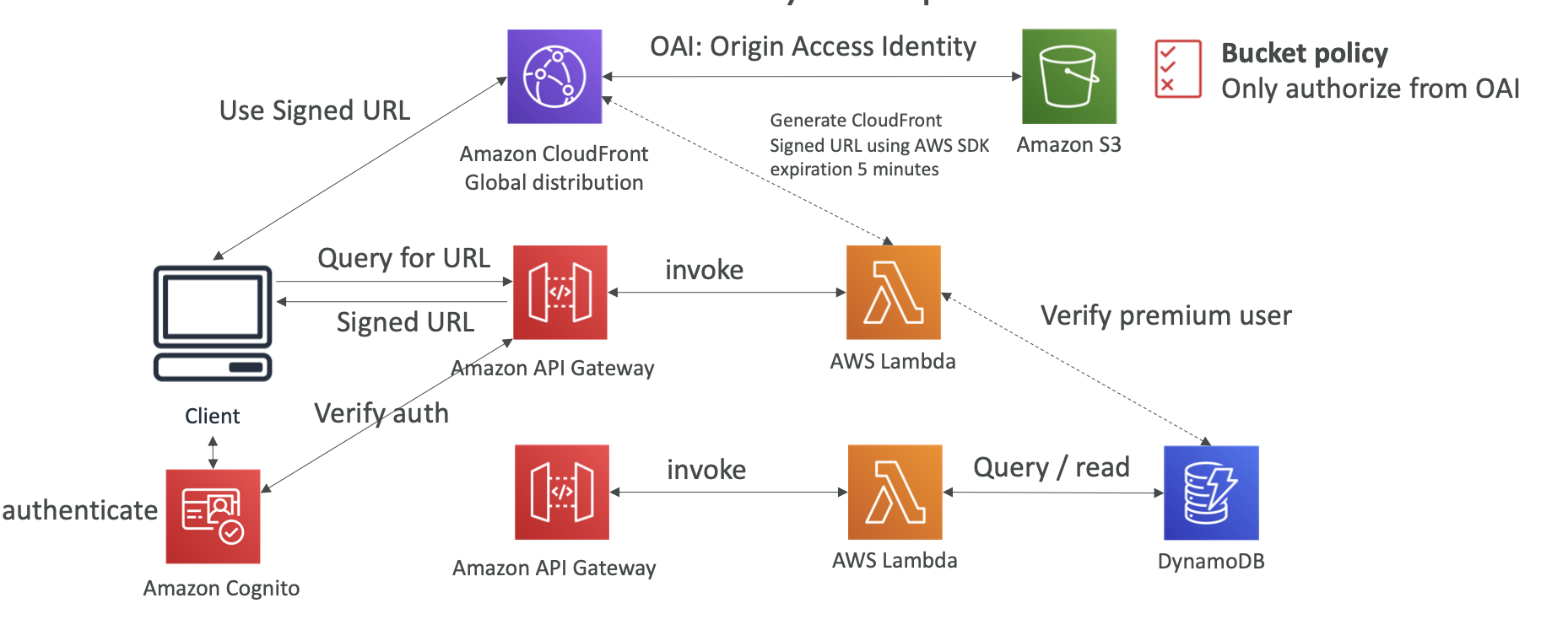

S3 bucket policy should allow the “S3:GetObject” action if the Principal comes from the CloudFront Origin Access Identity.

Bucket Policy for OAI

- Object Lock

- Object Lock should be enabled to store objects using write once and read many (WORM) models.

- You can prevent the S3 objects from being deleted or overwritten for a fixed amount of time or indefinitely.

- Note: Versioning does not prevent objects from being deleted or modified.

- Event Notification

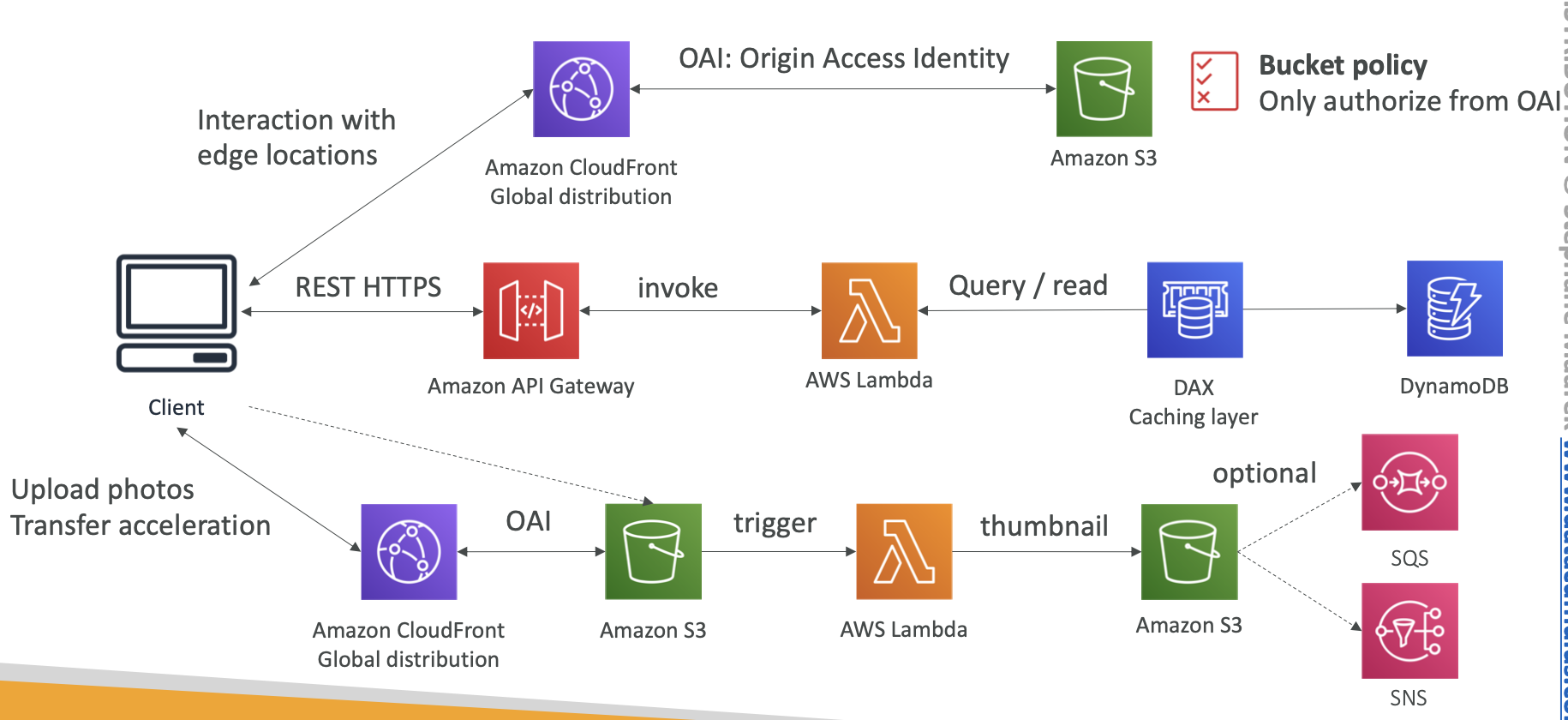

- you can use Event Notification from the S3 bucket to invoke the Lambda fuction whenever the file is uploaded.

S3 Glacier

You cannot directly upload files to Glacier through S3 console

Retrieval

- Expedited Retrieval

- Expedited retrievals allow you to access data in 1-5 mins.

- Expedited retrievals allow you to quickly access your data when occassional urgent requests for a subnet of archives are required

- Standard Retrieval

- need 3-5 hours

- Bulk Retrieval

- need 5-12 hours

- Expedited Retrieval

Vault Lock

- S3 Glacier Vault Lock allows you to easily deploy and enforce compliance controls for individual S3 Glacier vaults with a vault lock policy.

- You can specify controls such as “write once read many” (WORM) in a vault lock policy and lock the policy from the future edits.

- Once locked, the policy can no longer be changed.

Objects in Glacier Deep Archive

- You cannot directly move objects to another storage class. These need to be restored first & then copied to the disired storage class.

- S3 Glacier console can be used to access vaults and objects in them. But is cannot be used to restore the objects.

Athena

- charged per query and amount of data scanned

- for SSE-KMS, Athena can determine the proper materials to decrypt the dataset when creating the table. You do not need to provide the key information to Athena.

- Athena can create the table for the S3 data encryption by SSE-KMS

- Workgroup

- A separate Workgroup can be created based upon users, teams, applications or workloads. This will minimize the amount of data scanned for each query, improve performance and reducing cost.

- Using Workgroup to isolate queries for teams, applications, or different workloads.

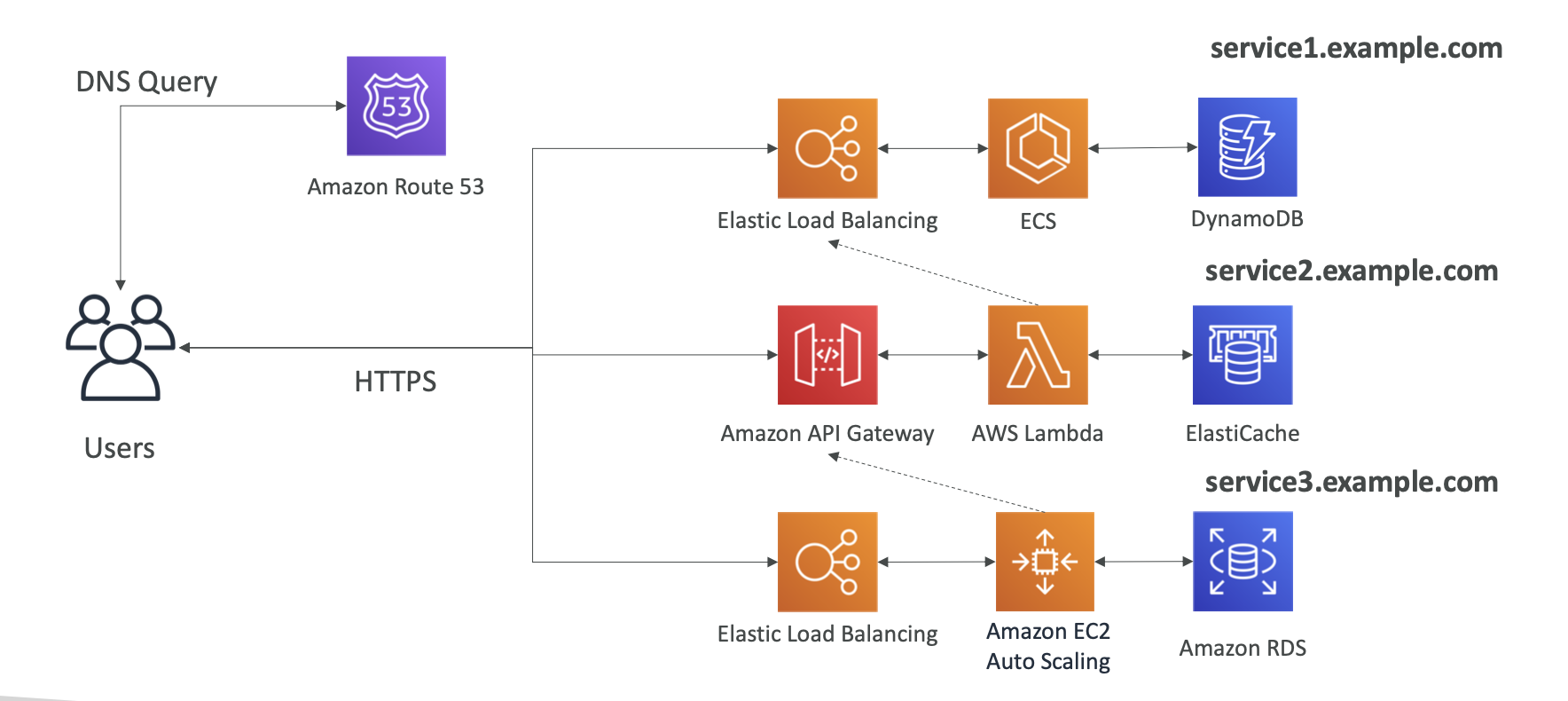

Route 53

is a global service, not regional

is highly available and scalable DNS web service. You can use Route 53 perform three main functions

- Register domain names

- Route internet traffic to the resources for your domain

- Check the health of your resources

Route 53 is not used for load-balancing traffic among individual resource instances

Record Type

- A: convert to an IPv4 address

- AAAA: convert to an IPv6 address

- CNAME: convert to another hostname

- only for non root domain

- Alias: convert to a specific AWS resource

- works for root domains and subdomains

- free of charge

- native health check

Alias Records for your domain and subdomain

- Instead of using IP addresses, the alias records use S3 website endpoints.

- S3 maintains a mapping between the alias records and the IP addresses where S3 buckets reside.

- A record set can only have one Alias Target

DNS TTL

- it enables the client to cache the response of a DNS query

Routing Policy

- Simple Routing Policy

- if you set multiple values, it will return a random one

- using command(dig) will find multiple values returned

- can’t attach a Health Check

- Weighted Routing Policy

- only see one value returned, not multiple values

- can attach a Health Check

- Latency Routing Policy

- Failover Routig Policy

- must attach a Health Check

- Choose one for primary, and another for secondary

- GeoLocation Routing Policy

- Multi Value Routing Policy

- Multi Value almost like Simple Policy, the only diff is the Healch Check

- Simple Routing Policy

Traffic can route to the following services

- CloudFront

- EC2

- Beanstalk

- ELB

- RDS

- S3

- WorkMail

Reasons for displaying “Server not found” error

- You didn’t create a record for the domain or subdomain name

- You created a record but specified the wrong value

- The resource that you’re routing traffic to is unavailable

Logging and Monitoring Route 53

Monitoring Health Checks using CloudWatch

- By default, metric data for Route 53 health checks is automatically sent to CloudWatch at 1m intervals

Monitoring Domain Registrations, including

- Status of new domain registrations

- Status of domain transfers to Route 53

- List of domains that are approaching the expiration date

Logging Route 53 API calls with CloudTrail

Types of Route 53 Health Checks

- Health Checks that monitor an endpoint

- Health Checks that monitor other health checks

- Health Checks that monitor CloudWatch alarms

There is no interface endpoint for Route 53

Route 53 is not inside the AWS backbone

ELB

An ELB must have at least two AZs, and ELB can’t cross region

Types of ELB

- ALB

- Layer 7

- WAF can be attached to ALB

- SG can attach to it

- ALB do not have the spot type

- Target Groups:

- EC2 instance

- ECS tasks

- Lambda functions

- IP addresses

- ALB can route to multiple target groups

- The application must check X-Forward-For in HTTP request header for requiring IP address of users

- ALB does not charge users based on the number of enabled AZs

- Support dynamic mapping

- NLB

- Layer 4 (Transport)

- SG cannot attach to it

- Support dynamic mapping

- Gateway LB

- Layer 3 (Network)

- CLB (legacy)

- It does not support dynamic mapping

- ALB

Troubleshooting

- LB shows 503 means there is no registered target

- if LB cannot connect to your application, please check SG

Load Balancer Stickiness

- Works for ALB and CLB

- Use case: make sure users don’t lose their session data

Cross Load Balancing

- ALB

- Always on

- no charge for inter AZ data

- NLB

- default off

- you pay inter AZ data if enable

- CLB

- no charge for inter AZ data if enable

- ALB

SSL/TLS

- using SNI to resolve multiple SSL certificates onto one web server

- only works for ALB & NLB, CloudFront

- for CLB, must use multiple CLB for different hostnames

TLS listeners

To use a TLS listener, you must deploy at least one server certificate on your load balancer. The load balancer uses a server certificate to terminate the front-end connection and then to decrypt requests from clients before sending them to the targets

ELB uses a TLS negotiation configuration, known as security policy, to negotiate TLS connections between a client and the load balancer.

A security group is a combination of protocols and ciphers.

The protocol establishes a secure connection between a client and a server and ensures that all data passed between the client and your load balancer is private.

A cipher is an encryption algorithm that uses encryption keys to create a coded message.

NLB does not support a custom security policy

NLB requires one certificate per TLS connection to encrypt traffic between client & NLB annd forward decrypted traffic to target servers. Using AWS Certificate Manager is a preferred option, as these certificates are automatically renewed on expiry

Connection Draining

- when existing connection shows unhealthy, the users must wait the response, and this period means draining mode. The new requests from other users will

- redirect to other targets.

- If you set draining value is 0, it means the connection will be droped, and the user will receive an error from ELB

- CLB: names Connection Draining

- ALB & NLB: in Target Group and names Deregistration Delay

ELB rules of Traffic

- Listener: incoming traffic is evaluated by ports

- Rules: listener then will invoke rules to decide what to do with the traffic

- Target Groups

- Health Check

- ELB doesn’t terminate unhealthy instances, it just redirect traffic to the healthy one

- for NLB and ALB, Health Checks locate in Target Group

- Monitor ALB

- CloudWatch metrics

- Access logs

- Request tracing

- CloudTrail logs

Reasons for connection failure of Internet-facing load balancer

- Your internet-facing load balancer is attached to a private subnet

- A SG or NACL does not allow traffic

Target Health Status of a Registered Target

- Initial

- Healthy

- Unhealthy

- Unused

- draining (deregistration)

Reasons for unhealthy

- A Security Group of the instance does not allow traffic

- NACLs does not allow traffic

- The ping path does not exist

- The connection times out

- The target did not return a successful response code

Target Type (you cannot change its target type)

- instance

- The targets are specified by instance ID

- ip

- The targets are specified by IP address

- You can’t specify publicly routable IP addresses

- If you specify targets using an instance ID, traffic to instances using the primary private IP address specified in the primary network interface for the instance

- If you specify targets using IP addresses, you can route traffic to an instance using any private IP address from one or more network interfaces.

- instance

Integration with ECS (Dynamic Mapping)

- Since ALB/NLB supports dynamic mapping. We can configure the ECS service to use the load balancer, and a dynamic port will be selected for each ECS task automatically. With Dynamic mapping, multiple copies of a task can run on the same instance

ELB + ASG is good for fault tolerance

- Using ELB with ASG, both should be in the same region and launch in the same VPC.

CloudWatch metrics

- Latency

- The total time elapsed, in seconds, from the time the load balancer sent the request to a registered instance until the instance started to sennd the response headers.

- RequestCount

- The number of requests completed or connections made during the specified interval

- Latency

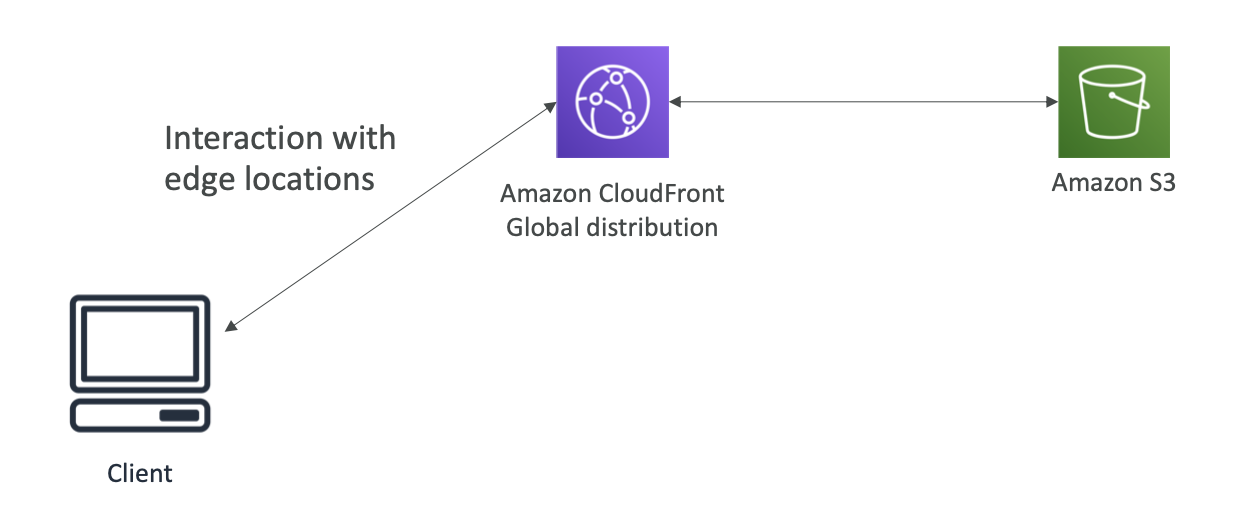

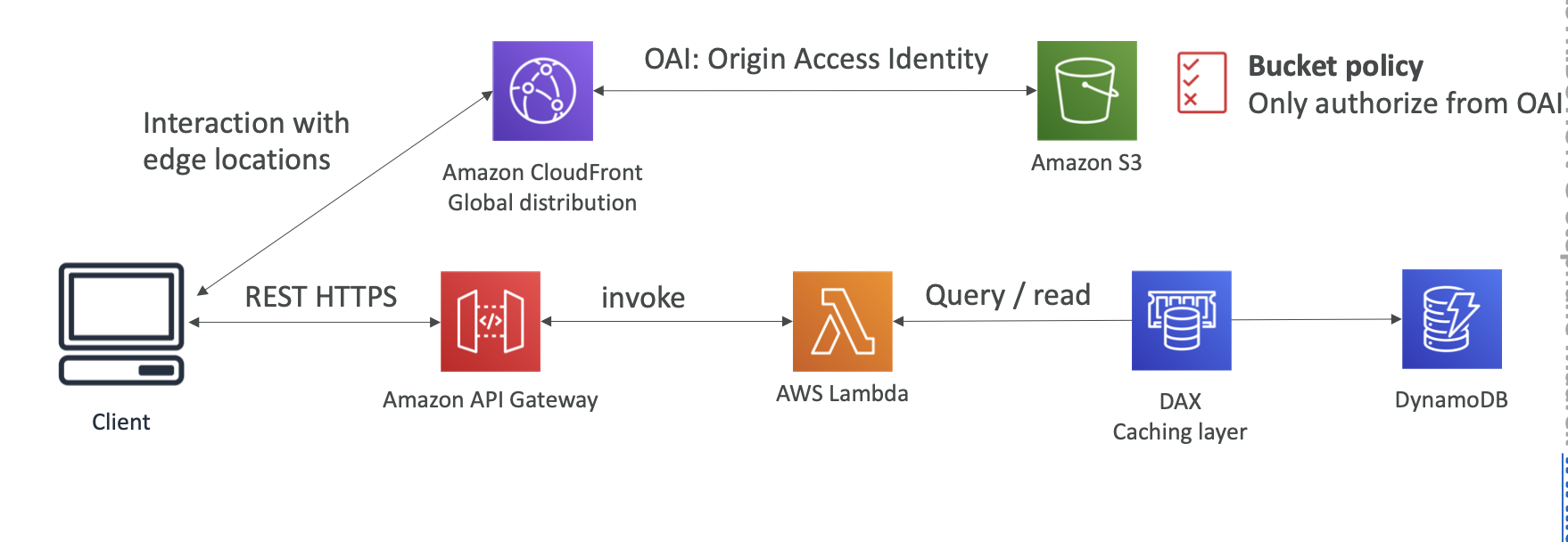

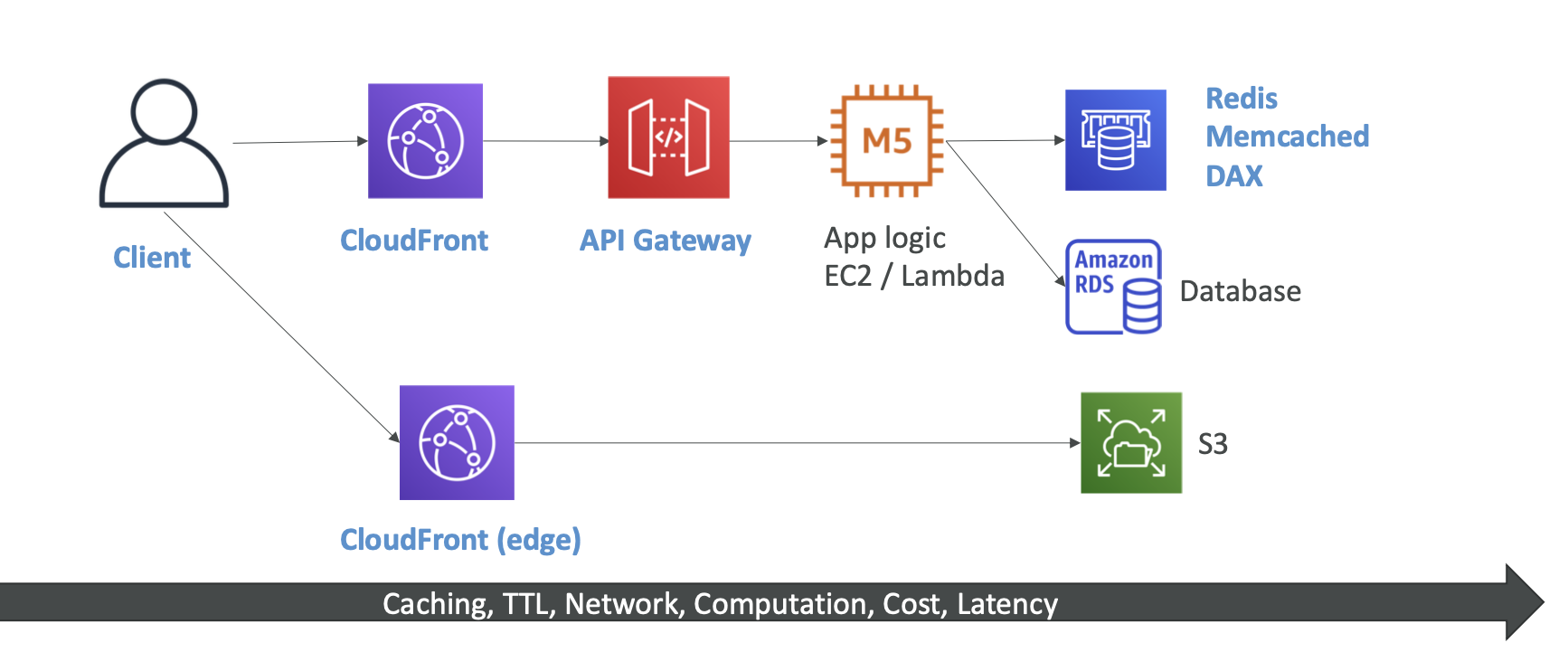

CloudFront

CDN

For improving read performance, content is cached in the Edge Location

can integrate with Shield and WAF for DDoS protection

can expose HTTPS and can talk to internal HTTPS backends

It can be used to cache web content from the origin server to provide users with low latency access, and offload origin server loads

For files less than 1 Gb, using CloudFront would provide better performance than S3 Transfer Acceleration

CloudFront Origins

S3 Bucket

- cache content at the edge

- enhanced security with Cloud Origin Access Identity (OAI)

- can be as an ingress

Custom Origin(HTTP)

ALB

EC2

S3 website

Route 53

any HTTP backend

Distribution

A collection of Edge Locations

CloudFront vs S3 CRR

- CloudFront

- great for static content

- files cached for a TTL

- S3 CRR

- great for dynamic content

- near real-time

- only read

- CloudFront

OAI (Origin Access Identity)

Using OAI to restrict S3 to be accessed only by this identity

Signed URL

Using SDK API to generate Signed URL for restricting visit.

Signed URL to CloudFront, and OAI to S3 can create a simple media sharing website.

- Signed URL: Access to one file

- Signed Cookie: Access to a bunch of files

The data transfer out to the internet or origin is not free. Adifferent rate is charged depending on the region.

Data transfer from origin to CloudFront Edge Locations is free

Because for each custom SSL certificate associated with one or more CloudFront distributions using the Dedicated IP version of custom SSL certificate support, you are charged at $600 per month

If you want to increase the cache durationn for certain contents, you can add a Cache-Control header to control how long the objects stay in the CloudFront cache

Error page can be customized through CloudFront

Invalidating

- Invalidating the object removes it from the CloudFront edge cache to return the correct file to the user.

Redirect HTTP to HTTPs

- Configure the Viewer Protocol Policy of the CloudFront distribution to be “Redirect HTTP to HTTPs”

If you run PCI or HIPAA-compliant workloads based on the AWS Shared Responsibility Model, we recommend that you log your CloudFront usage data for the last 365 days for future auditing purpose. To log usage data, you can do the following

- Enable CloudFront access logs

- Capture requests that are sent to the CloudFront API

Query String Forwarding

- CloudFront Query String Forwarding only supports Web distribution. For query string forwarding, the delimiter character must always be a “&” character. Parameters’ names and values used in the query string are case sensitive. Parameter Names and Values should use the same case.

Global Accelerator

- For global users to access application that deployed in AWS, minimize latency and provide a straight connection to AWS resources

- Unicast IP vs Anycast IP

- Unicast IP: one server holds one IP address

- Anycast IP: all servers hold the same IP address and clients are routed to the nearest one

- Global Accelerator using Anycast IP

- 2 Anycast static IP addresses are created for your application

- Anycast IP send traffic to the Edge Location, then Edge Location send traffic to ALB or something else

- Improve performance and availability of the application

- Works with Elastic IP, EC2, ALB, NLB, public or private

- No caching

- DDoS protection by Shield

- Global Accelerator vs CloudFront

- Same

- Using Edge Locations around the world

- Integrate with Shield for DDoS

- Diff

- GA

- great for application serving global users

- all requests redirect from Edge Locations to AWS services, no caching

- great for TCP and UDP

- fast regional failover

- CloudFront

- great for static and dynamic content

- content is cached at the Edge Location

- GA

- Same

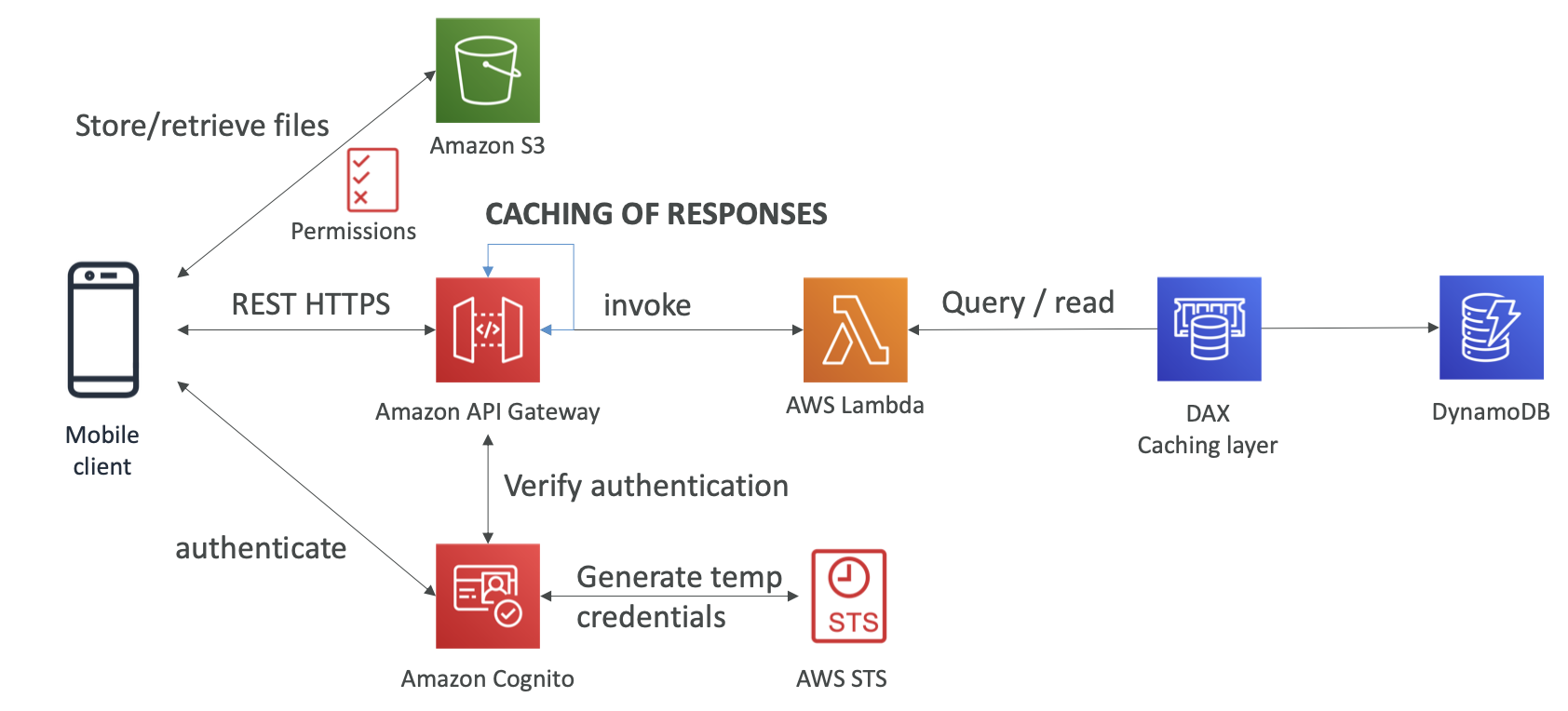

API Gateway

Support for WebSocket protocol

Handle API versioning

Handle different environments

Handle authentication and authorization

Handle request throttling

Cache API response

- Default TTL value for API Caching: 300s

- Maximum TTL value: 3600s (60m)

Integration Type

- Lambda

- Easy way to expose REST API backed by AWS Lambda

- HTTP

- Expose HTTP endpoints in the backends (HTTP API on premises, ALB)

- AWS Services

- Expose any AWS API through API Gateway (Step Function workflow, SQS)

- Mock

- VPC Link

- A way to connect to the resources within a private VPC

- Lambda

Endpoint Types

- Edge-Optimized (default): for global users

- through Edge Location

- API Gateway still lives in one region

- Regional: for clients within the same region

- Cloud manually combine with CloudFront (more control on caching strategies and distribution)

- Private

- Can only be accessed from VPC using ENI

- Edge-Optimized (default): for global users

Endpoint Integration inside a Private VPC

- You can also now use API Gateway to front APIs hosted by backends that exist privately in your own data centers, using AWS Direct Connect links to your VPC.

Authentication & Authorization

IAM Permissions

- Good for Authentication + Authorization

- For authorizing users which are inner ones

- Using Sig v4 capacity where IAM credentials are in headers

Lambda Authorizer (formerly Custom Authorizer)

- Good for Authentication + Authorization

- Using Lambda to verify token in headers being passed

- Option to cache result of authentication

- Helps to use OAuth / SAML / 3rd party type of authentication

- Lambda must retun an IAM policy for the user

Cognito User Pools

- Cognito helps with Authentication, not Authorization

- Cognito fully managed user lifecycle

- API Gateway automatically verify

Throttling Limit Setting

- Server-side throttling limits are applied across all clients. These limit settings exist to prevent your API

- Per-client throttling limits are applied to clients that use API keys associated with your usage policy as client identifier

Accout-level throttling per Region

- When request submissionns exceed the steady-state request rate and burst limits, API Gateway fails the limit-exceeding requests and returns 429 Too Many Request errors responses to the client.

- Burst limit corresponds to the maximum number of concurrent request submission that API Gateway can fulfill at any moment.

- Ex: given a burst limit of 5,000 and account-level rate limit of 10,000 request per second in the Region

- If the caller sends 10,000 in the first millisecond, API Gateway serves 5,000 of those reuqests and throttles the rest in the one-second period

Usage Plan

- A Usage Plan is a set of rules that operates as a barrier between the client and the target of the API Gateway. This set of rules be applied to one or more APIs and stages

- API Key must be associated with a usage plan, one or more; otherwise, it will not be attached to any API. Once attached, the API keys are applied to each API under the usage plan.

- API Key feature useful to filter unsolicited requests. It’s not a proper way to apply authorization to the API method

- Client put api key in request header “x-api-key”

Mehtod Level Throttling can override Stage Level Throttling in a Usage Plan

Controlling Access to an API in API Gateway

- Resource Policies

- Using resource policies to allow your API to be securely invoked by

- Users from a specified AWS account

- Specified source IP address ranges or CIDR blocks

- Specified VPC or VPC endpoints

- API Gateway resource policies are attached to resources, while IAM policies are attached to IAM entities

- Using resource policies to allow your API to be securely invoked by

- Standard AWS IAM roles and policies

- CORS

- Lambda Authorizers

- Amazon Cognito User Pools

- Client-side SSL Certificates

- Can be used to verify that HTTP requests to your backend system from API Gateway

- Usage Plans

- Resource Policies

Security Measures

- API Gateway supports throttling settings for each method in your APIs, you can set a standard rate limit and a burst limit per second for each method in your REST APIs. Further, API Gateway automatically protects your backend sysetems from distributed denial-of-service (DDoS) attacks, whether attacked with counterfeit requests (Layer 7) or SYN floods (Layer 3)

In Cache settings, the actions that you can do manually

- Flush entire cache

- Change cache capacity

- Encrypt cache data

Logs

CloudWatch Logs

- Loged data includes errors or execution traces (such as request or response parameter values or payloads)

Access Logging

- In access logging, as an developer, want to log who has accessed your API and how the caller accessed the API. You can create your own log group or choose an existing one, which could be managed by API Gateway

Permissions

- Controlling access to API Gateway with IAM permissions by controlling access to the two API Gateway component processes

- Management Component

- create, deploy, and manage an API in API Gateway

- must grant the API developer permissions

- Execution Component

- call a deployed API or refresh teh API caching

- must grant the API caller permissions

- Management Component

- Controlling access to API Gateway with IAM permissions by controlling access to the two API Gateway component processes

RDS

RDS Backups

- Automated Backups

- daily full backup of the database (during the maintenance window)

- every 5 minutes backup transaction logs

- 7 days retention

- Storage I/O may be suspended during backup

- DB Snapshots

- Automated Backups

During automated backup, RDS creates a storage volume snapshot of the entire Database Instance. RDS uploads transaction logs for DB instances to S3 every 5 mins. To restore DB instance at a specific point in time, a new DB instance is created using DB snapshot.

If you disable automated backups, it disables point-in-time recovery.

RDS Read Replicas

- Up to 5 Read Replicas

- Within AZ, Cross AZ or Cross Region

- Replicas are ASYNC, so reads are eventually consistent

- Read Replicas can be promoted to DB

- Use case: split workload for BI, data analytics, etc…

- If you create your Read Replicas in another AZ, you need to pay connection fee between different AZs

RDS Multi AZ (DR)

- SYNC Replication

- One DNS Name - automatic failover to standby

- Read Replicas be setup as Multi AZ for DR

- provide enhanced availability and durability for DB instances.

RDS Encryption

- at rest encryption

- Using KMS and defined at launch time

- If the master don’t encrypt, read replicas also can’t be encrypted

- Transparent Data Encryption (TDE) is available for Oracle and SQL Server

- in-flight

- Using SSL to enforce SSL (PostgreSQL by set value, and MySQL by typing SQL command)

- at rest encryption

IAM database authentication

- works with MySQL and PostgreSQL

- You don’t need a password, just an authentication token obtained from IAM and RDS API calls

- Auth token has a lifetime of 15 minutes

RDS Failover Mechanism

- Failover mechanism automatically changes the DNS CNAME record of the DB instance to point to the standby DB instance

Solution for Read-Heavy

- read replicas

- ElastiCache

- Sharding the dataset

Solution for too many PUT

- Creating an SQS queue and store these PUT requests in the message queue and then process it accordingly

- DB Parameter Groups

- You manage your DB engine configuration through the use of parameters in a DB parameter group.

- DB parameter groups act as a container for engine configuration values that are applied to one or more DB instances.

- A default DB parameter group is created if you create a DB instance without specifying a customer-created DB parameter group.

- You can’t modify the parameter settings of a default DB parameter group. You must create your own DB parameter group to change parameter settings from their default value

- If you want to use your own DB parameter group, you simple create a new DB parameter group, modify the desired parameters, and modify your DB instance to use the new DB parameter group

Aurora

- Aurora cost more than RDS (20% more) - but is more efficient

- Data is hold in 6 replicas, across 3 AZs

- Auto healing capability

- Multi AZ, auto scaling read replicas

- Aurora database can be global for DR or latency purpose

- Auto scaling storage from 10GB - 64TB

- Aurora Serverless Option

- Support for CRR

- Aurora can span multiple regions by Aurora Global Database

DynamoDB

Highly avaialbe with replication across 3 AZs

Distributed NoSQL database

Integrate with IAM for authentication and authorization

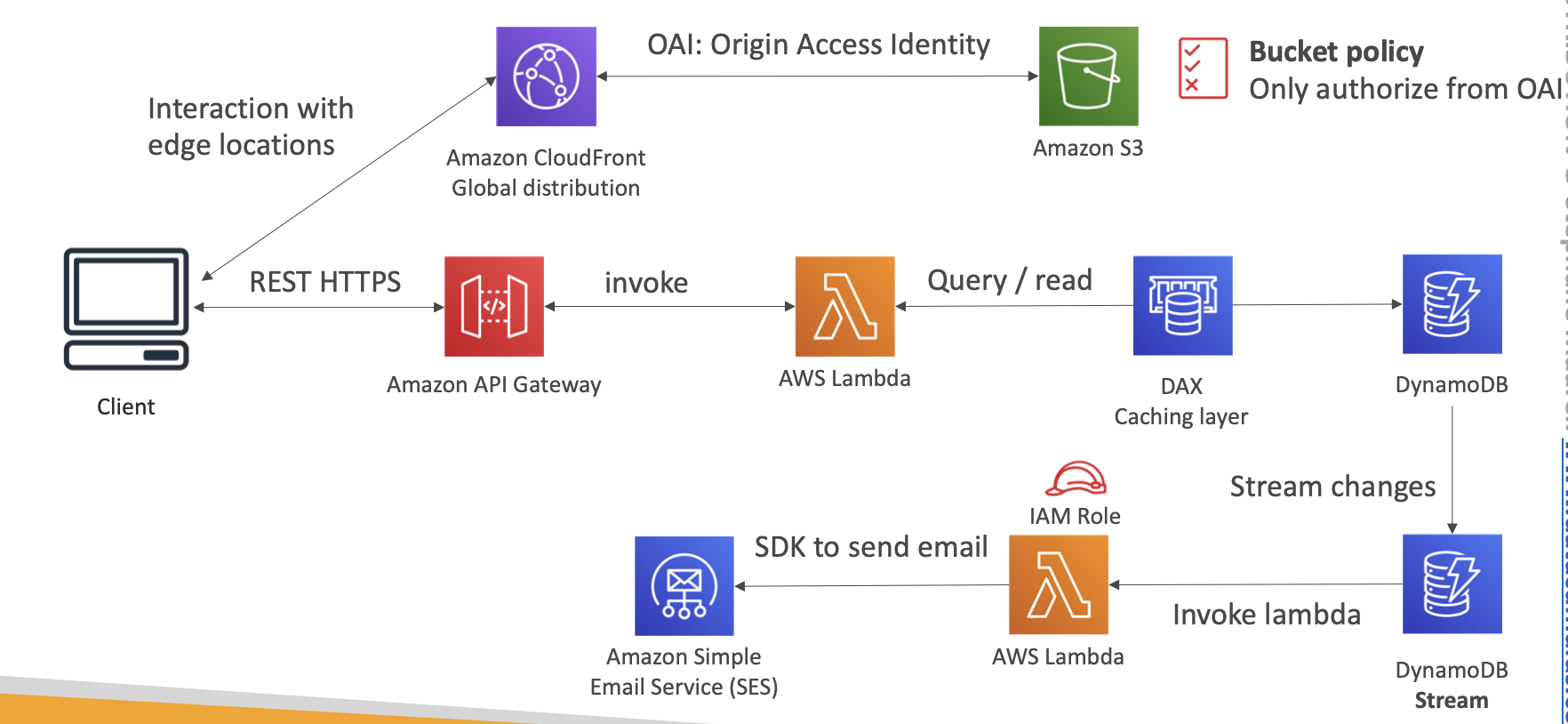

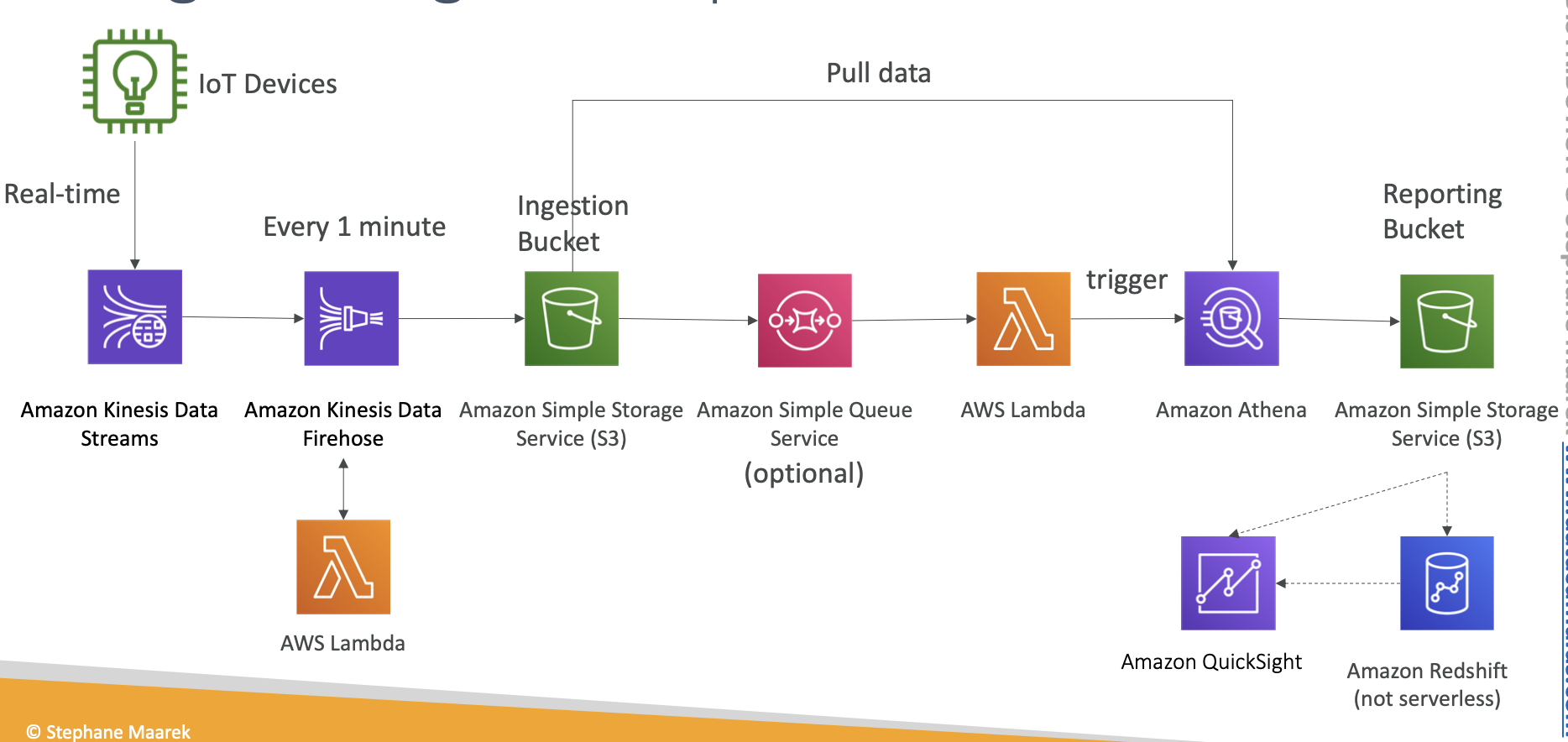

Enable event driven progarmming with DynamoDB Streams

Features

- DynamoDB is made of tables

- each table has a primary key

- each table has a infinite number of items

- each item has attributes

- max size of item is 400KB

Provisioned Throughputs

- Table have provisioned read and write capacity units

- Read Capacity Unit (RCU): throughput for reads

- 1 RCU = 1 strongly consistent read of 4KB per second

- 1 RCU = 2 eventually consistent read of 4KB per second

- Write Capacity Unit (WCU): throughput for writes

- 1 WCU = 1 write of 1KB per second

- Option to setup auto-scaling of throughput

- Throughput can be exceeded temporarily using “burst credit”

- If burst credit are empty, you’ll get a “ProvisionedThroughputException”

DynamoDB Accelerator (DAX)

- Seamless cache for DynamoDB, no application re-write

- Writes go through DAX to DynamoDB

- Solves the Hot Key Problem

- 5 minutes TTL for cache by default

- up to 10 nodes in the cluster

- Multi AZ

DynamoDB Streams

It can monitor the changes to a DynamoDB table.

this stream can be read by Lambda, and then we can do

- react to changes in real time

- analytics

- create derivative tables/views

- insert into ElasticSearch

using Streams to implement CRR

Stream has 24 hours of data retention

When you enable DynamoDB Streams on a table, you can associate the stream ARN with a Lambda function that you write. Immediately after an item in the table is modified, a new record appears in the table’s stream. Lambda polls the stream and invokes your Lambda function synchronously when it detects new stream records.

Transaction

- Coordinate with Insert, Update, Delete across multiple tables

- Include up to 10 unique items or up to 4 MB of data

On Demand Option

- No capacity planning needed (WCU/RCU) - scales automatically

- 2.5x more expensive than provisioned capacity

- Global Tables

- Multi region, fully replicated, high performance

- Must enable DynamoDB Streams

- Useful for low latency, DR purposes

- Capacity Planning

- Planned capacity: Provisioned WCU & RCU, can enable auto scaling

- On-demand capacity: get unlimited WCU & RCU, no throttle, more expensive

- DMS can migrate data from Mongo, Oracle, MySQL, S3, etc… to DynamoDB

- Better for storing metadata

- It doesn’t have the feature of Read Replica

- Auto Scaling

- With DynamoDb Auto Scaling, it can automatically increase its write capacity for the spike and decrease the throughput after the spike.

- It’s good for applications where database utilization cannot be predicted.

- It can help to scale dynamically to any load for both DynamoDB tables and Global Secondary Index

Lambda

Pay per request and compute time

Lambda is good for running code, not packaging

Lambda Limits - per region (apply to configuration, deployments, and execution)

- Function Memory allocation

- 128MB - 3008MB (64MB increments)

- Function Timeout

- 900s (15m)

- Function Environment Variables

- 4KB

- Function Resource-based Policy

- 20KB

- Function Layers

- 5 layers

- Function burst concurrency

- 500 - 3000 (varies per Region)

- Invocation Payload (request and response)

- 6 MB (synchronous)

- 256 KB (asynchronous)

- Deployment Package

- 50 MB (zipped)

- 256 MB (unzipped)

- Disk

- Disk capacity: 512MB

- Can use /tmp directory (500MB) to load other files at startup

- Function Memory allocation

Lambda@Edge

- Use case

- When you want to run a global AWS Lambda, and build more responsive applications

- Implement request filtering before reaching your application

- Viewer request

- Origin request

- Origin response

- Viewer response

- Website Security and Privacy

- Dynamic Web Application at the Edge

- SEO

- A/B Testing

- User Prioritization

- User Tracking and Analytics

- User Authentication and Authorization

- Lambda@Edge function does provide the capability to cutomize content. Lambda@Edge allows users to run their own Lambda functions to customize the content that CloudFront delivers, executing the functions in AWS Regions close to the viewer. Lambda functions runn in response to CloudFront events, without provisioning or managing servers.

- Use case

By default Lambda run in No VPC

Disadvantage for Serverless services: Cold Start

Lambda funcntion environment variables are used to configure additional parameters that can be pssed to lambda function

Services that invoke Lambda Functions Synchronously

- ELB (ALB)

- Cognito

- API Gateway

- CloudFront (Lambda@Edge)

- Kinesis Firehose

- Step Functions

- S3 Batch

- Lex

- Alexa

Services that invoke Lambda Fuctions Asynchronously

- S3

- SNS

- SES

- CloudFormation

- CloudWatch Logs

- CloudWatch Events

- CodeCommit

- CodePipeline

- Config

- IoT

- IoT Events

Lambda supports the following poll-based services

- Kinesis

- DynamoDB

- SQS

- Debugging and error handling

For asynchronous invocation

If you don’t specify a DLQ for failed event, this event will be discard after several failed retries

DLQ Resources

- SNS

- SQS

If your functions runs out of memeory, the Linux kernel will kill your process immediately. There is no supported way at this time to catch and handle this error either.

When using Lambda, you are only responsible for your code. AWS will perform provisioning capacity, monitoring, deploying your code and logging on your behalf.

Lambda event source mappings support SQS standard and SQS FIFO

Event Source Options

- Enabled

- A flag to signal Lambda that it should start polling your SQS queue

- EventSourceArn

- The ARN of your SQS queue that Lambda is monitoring for new messages

- FunctionArn

- The Lambda function to invoke

- BatchSize

- The number of records to send to the function in each batch. For a standard queue this can be up to 10,000 records. For a FIFO queue the maximum is 10.

- Enabled

You can also invoke a Lambda function by Lambda’s invoke API.

When you updating a Lambda function, there will be a brief window of time, typically less than a minute, when requests could be served by either the old or the new version of your function.

Lambda Function ARN

- Qualified ARN - with version suffix

- arn:aws:lambda:aws-region:acct-id:function:helloworld:$LATEST

- Unqualified ARN - without version suffix

- arn:aws:lambda:aws-region:acct-id:function:helloworld

- You cannot use Unqualified ARN to create an alias.

- This Unqualified ARN will invoke a LATEST version

- Qualified ARN - with version suffix

Lambda Alias

- Invokers don’t need to change Lambda ARN when usig Lambda Alias, creators just need to remap Lambda Alias to a new version of Lambda after publishing a new version

Publishing Lambda

- When you publish a version, Lambda makes a snapshot copy of the Lambda function code (and configuration) in the $LATEST version. A published version is immutable (both code and configuration).

Version numbers are never reused, even for a function that has been deleted and recreated

Not recommended for using $LATEST ARN in PRODUCTION mode, there are chances that the configuration can be meddled and can cause unwanted issues.

Function Policy

- Grant cross-account permissions (not on the execution role policy)

- function policy cannnot be edited from the AWS console (using either CLI or SDK)

Lambda accessing Private VPC

- If your Lambda function accesses a VPC, you must make sure that your VPC has sufficient ENI capacity to support the scale requirements of your Lambda function. Using formula to determine the ENI capacity

- Peak cocurrency executions = Peak Requests per Second * Average Function Duration (in seconds)

- ENI capacity = Projected peak concurrent execution * (Memory / 3 GB)

Enviroment Variable Encryption

- By default, all data in environment variables are encrypted by KMS, then automatically decrypted to Lambda code. (not encrpted during deployment process, only after deployment)

- Using encryption helper and decryption helper to encrypted and decrypted sensitive data during deployment

AWSLambdaBasicExecutionRole

- Grants permissions only for CloudWatch Logs actions to write logs

- Contains

- logs:CreateLogGroup

- logs:CreateLogStream

- logs:PutLogEvents

CloudWatch metrics for Lambda

- Dead Letter Error

- Duration

- Invocation

Memory

Ensuring version of Lambda in the code

- Using Method

- getFunctionVersion()

- Using Environment Variables

- AWS_LAMBDA_FUNCTION_VERSION

- Using Method

Errors for the response of Lambda

- Synchronous invocation

- response header: X-Amz-Function-Error

- The status code is 200 for function error

- Asynchronous invocation

- Stored in DLQ if you specified a DLQ for errors

- Synchronous invocation

Users are charged based on the number of requests and the time it taks for the code to execute.

The duration price depends on the amount of memory allocated to the function.

Lambda function’s cost will be reduced if the execution duration decreases

CloudWatch

- CloudWatch Metrics

- Dimension is an attribute of metric (instance ID, environment, etc…)

- Up to 10 dimensions per metric

- Metrics have timestamps

- Metrics belong to namespaces

- EC2 instance metrics can monitor “every 5 minutes”, and you can also change to “every 1 minute”

- Using detailed monitoring if you want to prompt scale your ASG in EC2

- EC2 memory usage must be created for Custom Metrics

- Custom Metrics

- Standard: 1 minutes

- High resolution: up to 1 second, but higher cost

- Use API called PutMetricData and Use exponential back off in case of throttle errors

- Dashboards

- Dashboards are global, can include graphs from different regions

- CloudWatch Logs

- CloudWatch can collect log from

- Elastic Beanstalk

- ECS

- AWS Lambda

- VPC Flow Logs

- API Gateway

- CloudTrail based on filter

- CloudWatch log agents: ex. EC2

- Route 53: Log DNS queries

- can go to

- to S3 for archive

- to ElasticSearch for further analytics

- encrytion using KMS

- CloudWatch can collect log from

- CloudWatch Logs Insights: can be used to query logs and queries to CloudWatch Dashboards

- CloudWatch Logs Agent vs CloudWatch Unified Agent

- Logs Agent

- Old version

- Can only send to CloudWatch Logs

- Unified Agent

- Collect additional system level metrics such as RAM, processes, etc…

- Centralized configuration using SSM Parameter Store

- Logs Agent

- CloudWatch Unified Agent - Metrics

- CPU

- Disk metrics

- RAM

- Netstat

- Processes

- Swap Space

- CloudWatch Alarm

- trigger notification for any metrics

- Alarm can used for

- ASG

- EC2 Action

- SNS notification

- Alarm States

- OK

- INSUFFICIENT_DATA

- ALARM

- Period

- High resolution custom metrics: can only choose 10 sec or 30 sec

- CloudWatch Events

- Schedule: Cron jobs

- Event Pattern: Event rules to react to a service doing something

- Trigger to

- Lambda

- SQS

- SNS

- Kinesis Messages

- When target is Lambda, the inputs can be

- Matched event

- Part of the matched event

- Constant (JSON text)

CloudTrail

- Provides governance, compliance and audit for your AWS account

- CloudTrail is enable by default

- Get an history of events / API calls made within your AWS account by

- Console

- SDK

- CLI

- AWS Services

- To ensure logs have nont tampered with you need to turn on Log File Validation

- CloudTrail can be set to log across all AWS accounts in an organization and all regions in an account

- CloudTrail will deliver log files from all regions to S3 bucket and an optional CloudWatch Logs log group you specified.

- Two types of events

- Management Events

- Tracks management operations, turn on by default

- Data Events

- Tracks specific operations for specific AWS services, turn off by default

- Management Events

- By default, CloudTrail event log files are encrypted using S3 server-side encryption (SSE-S3). You can also choose to encrypt your log files with an KMS key.

- Log File Integrity

- After you enable CloudTrail log file integrity, it will create a hash file called digest file, which refers to logs that are generated. The Digest file can be validated using the public key. This feature ensures that all the modifications made to CloudTrail log files are recorded.

- Global Service Events Logging

- For most services, events are recorded in the region where the action occurred.

- For Global services such as IAM, and CloudFront, events are delivered to any trail that includes global services

- For most global services, events are logged as occurring in US East (N. Virginnia) Region, but some global service events are logged as occurring in other regions, such as US East (Ohio) Region or US West (Oregon) Region.

- If you change the configuration of a trail from logging all regions to logging a single region, global service event logging is turned off automatically for that trail

- For eliminating duplicate logs in all regions, you can disable Global Service Event in all regions and enable them in only one region

SQS

Unlimited throughputs, unlimited number of messages in queue

Default retention of messages: 4days, maximum is 14 days

Low latency ( < 10ms on publish and receive)

Limitation of 256KB per message sent

It helps in horizontal scaling of AWS resources and is used for decoupling systems.

SQS Access Policies

- Useful for cross-account access to SQS queues

- Useful for allowing other services (SNS, S3…) to write to an SQS queue

Message Visibility Timeout

- After a message is pulled by a consumer, it becomes invisible to other consumers

- By default, the “message visibility timeout” is 30 seconds, 12 hours maximum

- A consumer could call the “ChangeMessageVisibility” API to get more time

Dead Letter Queue

- Make sure to process the messages in the DLQ before they expire (Good to set a retention of 14 days in the DLQ)

Delay Queue

- Delay a message up to 15 minutes

- Default is 0 seconds

- Can set a default at queue level

Standard Queue

- Unlimited number of transactions per second

FIFO Queue

- Limited throughput: 300 msg/s without batching, 3000 msg/s with

- Exactly-once send capability

Queuing vs Streaming

- Queuing

- Generally will delete messages once they are consumed

- Not real-time

- have to pull

- Streaming

- Multiple consumers can react to events

- Event live in the stream for long periods of time, so complex operations can be applied

- Real-time

- Queuing

Amazon SQS Extended Client Library for Java

- Lets you send messages 256KB to 2GB in size

- The message will be stored in S3 and library will reference the S3 object

Short Polling vs Long Polling

- Short Polling (default)

- When you need a message right away, short polling is what you want

- Long Polling (most used)

- Maximum 20 seconds

- reduce cost

- Benefits for Long Polling

- Eliminate empty responses

- Eliminate false responses

- Return messages as soon as they become available

- Short Polling (default)

SQS doesn’t delete messages automatically

Permission exist on the Queue level, not on the message level

SQS Batch Actions

- To reduce costs and manipulate up to 10 messages with a single action, you can use the following actions

- SendMessageBatch

- DeleteMessageBatch

- ChangeMessageVisibilityBatch

- To reduce costs and manipulate up to 10 messages with a single action, you can use the following actions

DeleteMessage

- Deletes the specifed message from the specified queue. Using the ReceiptHandle of the message (not the MessageId which you receive when you send the message)

SQS Encryption

- SQS does not encrypt messages by default. You need to enable encryption on the Queue messages.

How to process data with priority

- Use two SQS queues, one for high priority messages and the other for default priority. The high priority queue can be polled first.

Queue Size Metrics

- ApproximateNumberOfMessagesVisible describes the number of messages available for retrieval. It can be used to decide the queue length.

Increasing Throughput

SQS queues can deliver very high throughput.

Horizontal Scaling

- To achieve high throughput, you must scale message producers annd consumers horizontally (add more producers and consumers)

- Horizontal scaling involves increasing the number of message producers and consumers in order to increase your overall queue throughput. You can scale horizontally in three ways

- Increase the number of threads per client

- Add more client

- Increase the number of threads per client and add more clients

Action batching

- Batching performs more work during each round trip to the service.

SNS

All messages published to SNS are stored redundantly across multiple AZs

It’s a real-time notification.

Integrate with AWS services

- CloudWatch (for alarms)

- ASG notification

- S3 (on bucket events)

- CloudFormation (upon state changes)

Publish

- Topic Publish (using SDK)

- Create a topic

- Create a subscription (or many)

- Publish to the topic

- Direct Publish (for mobile apps SDK)

- Create a platform application

- Create a platform endpoint

- Publish to the platform endpoint

- Works with Google GCM, Apple APNS, Amazon ADM, etc…

- Topic Publish (using SDK)

SNS Access Policies

- Useful for cross-account access to SNS topics

- Useful for allowing other services to write to an SNS topic

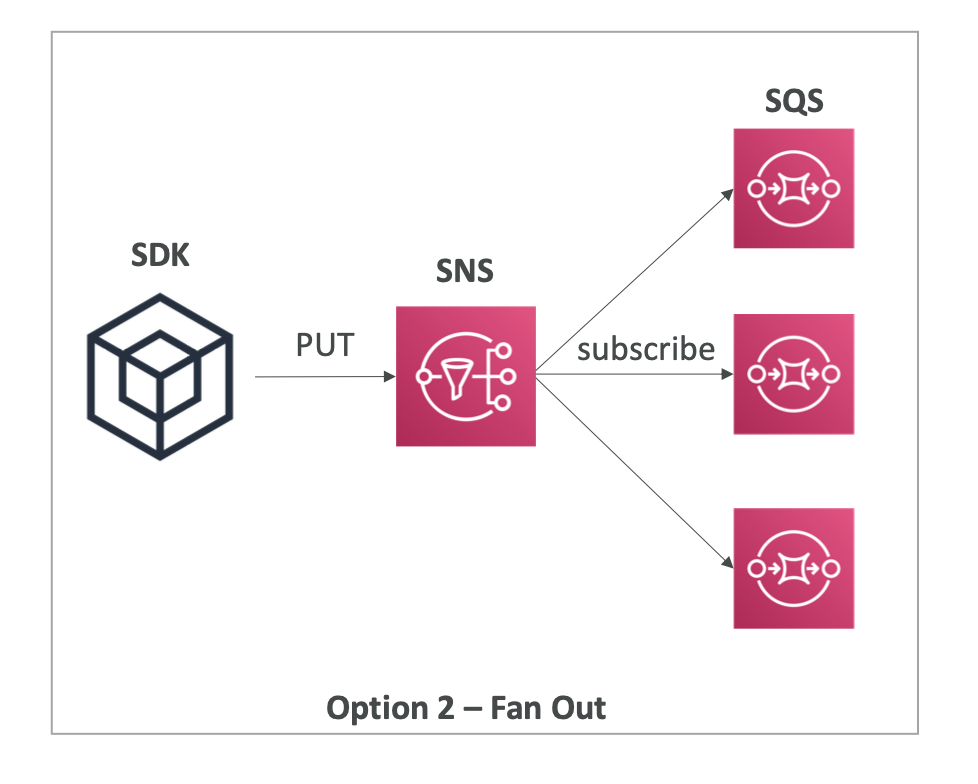

SQS + SNS: Fan Out

- Push once in SNS, receive in all SQS queues

- Fully decoupled, no data loss

- SQS allows

- data persistence

- delayed processing

- retries of work

- Make sure your SQS queue access policy allows for SNS to write

- SNS cannot send messages to SQS FIFO queues (AWS Limitation)

- Use Case

- S3 Events to multiple queues (if you want to send the same S3 event to many SQS queues, use fan-out)

Subscribers do not pull for messages (not like SQS)

Messages are instead automatically and immediately pushed to subscribers

SNS Topic

- Topic allows you to group multiple subscriptions together

- When topic deliver messages to subscribers it will automatically format your message according to the subscriber’s chosen protocol

- A topic is able to deliver to multiple protocol at once

- HTTP and HTTPS: create web hooks into your web application

- Email-JSON

- SQS

- Lambda

- SMS

- Platform application endpoint: Mobile Push

Delivery protocols for receving notification from SNS

- HTTP

- HTTPS

- Email-JSON

- SQS

- Application

- Lambda

- SMS

- SNS Message Filtering - Using Filter Policy

By default, a subscriber of an Amazon SNS topic receives every message published to the topic. A subscriber assigns a filter policy to the topic subscriptionto receive only a subset of the messages. A filter policy is a simple JSON obiert. The policy contains attributes that define which messages the subscriber receives.

- Message Attribute Items

- Name

- Type

- Value

MessageId

Messaging

- Two patterns of application communication

- Sync: can be problematic if there are sudden spikes of traffic

- Async

- SQS: queue model

- SNS: pub/sub model

- Kinesis: real-time streaming model

KMS

- Integrate with

- EBS

- S3

- Redshift

- RDS

- SSM - Parameter Store

- KMS can help in encrypting up to 4KB of data per call, if data > 4KB, use envelope encryption

- Envelope Encryption

- Encrypt your data key by your Customer CMK, then delete plain text data key. Keep encrypted data key and encrypted data stored in S3.

- When you need to decrypt data in S3, you first decrypt your data key by your CMK, then decrypt data by decrypted data key.

- If data is more than 4KB, using Envelope Encryption

- Three types of CMK

- AWS Managed Service Default CMK: free

- User Keys created in KMS: $1 / month

- User Keys imported (must be 256-bit symmetric key): $1 / month

- To give access to KMS to someone

- Make sure the Key Policy allows the user

- Make sure the IAM Policy allows the API calls

- KMS is regional specific. When you copy snapshot over, you need to re-encrypt your snapshot with a new key

- Keys are not transferrable out of the region they were created in. Keys are also region-specific.

- KMS Key Policies

- Control access to KMS keys

- You cannot control access without Key Policy

- Default KMS Key Policy

- Created if you don’t provide a specific KMS Key Policy

- Complete access to the key to the root user = entire AWS account

- Give access to the IAM policies to the KMS key

- Custom KMS Key Policy

- Define users, roles that can access the KMS key

- Define who can administer the key

- Useful for cross-account access of your KMS key

- Unauthorized KMS master key permission error

- In the KMS key policy, assign the permission to the application to access the key

- Key Rotation

- KMS will rotate keys annually and use the appropriate keys to perform cryptographic operations.

CLI & SDK

- Access Key ID and Secret Access Key are collectively known as AWS Credentials

CloudFormation

- For reducing cost:

- You can estimate the costs of your resources using the CloudFormation template

- Saving strategy: In dev, you could automatically delete templates at 5PM and recreate at 8AM, safely

- You can many stacks for many apps, and many layers

- Templates have to be uploaded in S3 and then referenced in CloudFormation

- To update a template, you can’t update it, you have to re-create a new version of that template

- Tempaltes Components

- Resources (Mandatory)

- Parameters: dynamic inputs for your template

- Mappings: the static variables for your template

- Outputs

- Conditionals

- Metadata: additional information about template

- When CloudFormation encounters an error, it will rollback with ROLLBACK_IN_PROGRESS

- CreationPolicy

- CreationPolicy is invoked only when CloudFormation creates the associated resource.

- The resources that support CreationPolicy

- AppStream::Fleet

- AutoScaling::AutoScalingGroup

- EC2::Instance

- CloudFormation::WaitCondition

- DeletionPolicy

- DeletionPolicy attribute

- With the DeletionPolicy attribute you can preserve, and in some cases, backup a resource when its stack is deleted. You specify a DeleteionPolicy attribute for each resource that you want to control. If a resource has no DeletionPolicy attribute, CloudFormation deletes the resource by default

- If you want to modify resources outside of CloudFormation, use a retain policy and then delete the stack. Otherwise, your resources might get out of sync with your CloudFormation template and cause stack errors

- DeletionPolicy options

- Delete

- the defualt DeletionPolicy of the most services is delete. It means CloudFormation will delete the resources and its content during stack deletion

- But some services are not

- RDS::DBCluster resources, default is Snapshot

- RDS::DBInstance resources, default is Snapshot

- S3 buckets, you must delete all objects in the bucket for deletion to succeed

- Retain

- Keeps the resource without deleting the resource or its content when its stack is deleted.

- Snapshot

- CloudFormation will create a snapshot for the resource before deleting it

- Resources that support snapshots

- EC2::Volume

- ElastiCache::CacheCluster

- ElastiCache::ReplicationGroup

- Neptune::DBCluster

- RDS::DBCluster

- RDS::DBInstance

- Redshift::Cluster

- Delete

- DeletionPolicy attribute

- Parameters on Template

- OnDemandPercentageAboveBaseCapacity

- SpotMaxPrice

- determine the maximum price that you are wiling to pay for Spot Instances

- Dirft Detection

- CloudFormation Dirft Detection can be used to detect changes made to AWS resources outside the CloudFormation Templates.

- It does not determine drift for property values that are set by default. To determine drift for these resources, you can explicitly set property values that can be the same as that of the default value.

- Resolving drift helps to ensure configuration consistency and successful stack operations

- CloudFormation Template

- A tempalte is a JSON- or YAML- formatted text file that describes your AWS infrastructure.

- Items

- Resources

- The required Resources section declares the AWS resources that you want to include in the stack, such as EC2 instance or S3 bucket

- Parameters

- Use the optional Parameters section to cutomize your templates. Parameters enable you to input custom values to your template each time you create or update a stack.

- Outputs

- The optional Outputs section declares output values that you can import into other stacks, return inn response, or view on the CloudFormation console. Ex, you can output the S3 bucket name for a stack to make the bucket easier to find.

- Mappings

- The optional Mappings section matches a key to a corresponding set of named values. Ex, if you want to set values based on a region, you can create a mapping that uses the region name as a key and contains the values you want to specify for each specific region.

- Rules

- The optional Rules section validates a parameter or a combination of parameters passed to a template during a stack update.

- Resources

Elastic Beanstalk

- Choose a platform, upload your code and it runs with little worry for developers about infrastructure knowledge

- No recommended for Production application

- Elastic Beanstalk is powered by a CloudFormation template setups for you

- ELB

- ASG

- RDS

- EC2 platforms

- Monitoring (CloudWatch, SNS)

- In-Place and Blue/Green elopement methodologies

- Security

- Can run Dockerized environments

- Beanstalk is free, but you pay the underlying infrastructure

- Enviroemnt Tier

- Web-Server Tier

- Serves HTTP requests

- Worker Tier

- Pulls tasks from an SQS queue

- Web-Server Tier

- Environment Types

- Load-balanced, scalable environemnt

- ELB + ASG + EC2

- Single-instance environment

- EC2 + Elastic IP

- Load-balanced, scalable environemnt

- Elastic Beanstalk component can create Web Server environments and Worker environments

- The worker environments in Elastic Beanstalk include an ASG and an SQS queue.

- It is not used for serverless applications.

- Terraform is an open-source infrastructure as code software tool to configure the infrastructure.

- Elastic Beanstalk supports the deployment of web applications from Docker containers. With Docker containers, you can define your own runtime environment. You can choose your own platform, programming language, and application dependencies that aren’t supported by other platforms.

- Note: Deploying Docker containers using CloudFormation is not an ideal choice.

- Elastic Beanstalk is an easy-to-use serivce for deploying and scaling web applications and services.

- We can retain full control over the AWS resources used in the application and access the underlying resources at any time

- Elastic Beanstalk vs ECS

- With ECS, you’ll have to build the infrastructure first before you can start deploying the Dockerfile

- With Elastic Beanstalk, you provide a Dockerfile, and Elastic Beanstalk takes care of scaling your provisioning of the number and size of nodes.

- Elastic Beanstalk vs CloudFormtaion

- Elastic Beanstalk is intended to make developers’ lives easier

- CloudFormation is intended to make systems engineers’ lives easier

- CloudFormation doesn’t automatically do anything.

Cognito

- Cognito User Pools (CUP)

- Sign in / Sign up functionality for app users

- Integrate with API Gateway

- Create a serverless database of user for your mobile apps

- Simple login: Username (or email) / password combination

- Possibility to verify emails / phone numbers and add MFA

- Can enable Federated Identity (Facebook, Google, SAML, ….)

- Sends back a JSON Web Token (JWT)

- CUP is a IdP

- Cognito Identity Pools (Federated Identity)

- Provide AWS credentials to users so they can access resources directly

- Integrate with User Pools as an identity provider